Knowing the Numbers: Assessing Attitudes among Journalists and Educators about Using and Interpreting Data, Statistics, and Research

By John Wihbey and Mark Coddington

[Citation: Wihbey, J., & Coddington, M. (2017). Knowing the Numbers: Assessing Attitudes among Journalists and Educators about Using and Interpreting Data, Statistics, and Research. #ISOJ Journal, 7(1), 5-24.]

Understanding statistics and quantitative information is becoming increasingly important to journalism practice. Drawing on survey data that includes more than 1,000 journalists and 400 journalism educators, this study offers a unique comparative perspective on the attitudes of both journalists and educators. Journalists are found to see more value in the use of academic research in journalism, while differences between the groups are not significant on the importance of statistics. Among journalism educators, personal statistical efficacy, rather than possession of a doctorate, best helps predict attitudes in these domains. Implications for data journalism are discussed.

American society is entering an era in which doubt about scientific and factual information is deepening—through the popularization of denial of scientific consensus on issues such as climate change or vaccination, and through the recent election of a president, Donald Trump, who defies journalistic efforts to factually characterize his record and policy positions (Rutenberg, 2016). In this context, thorough and thoughtful journalistic reporting based on analysis of empirical data and systematic information is both more greatly needed and more endangered than ever. It is more necessary because a suggestible public needs nuanced explanation of the foundations and limitations of new knowledge being produced by impartial, research-based communities, and more endangered because a lightning-fast, socially driven news cycle obscures public interest in such careful journalism.

Even as the news media environment has been characterized by increased speed and, at times, both greater superficiality and inaccuracy because of social media-driven trends, there have been broad calls for journalism to become more knowledge-based in its approach (Donsbach, 2014; Nisbet & Fahy, 2015; Patterson, 2013). Scholars and media observers who emphasize the need for broad technological innovation also concede the simultaneous need for more specialized knowledge to help distinguish news, as the web creates an abundance of competing sources on any given subject (Anderson, Bell, & Shirky, 2012).

The nature of training of future journalists in the context of the current media environment is deeply uncertain, but in many ways the challenges of the industry make long-running and unsettled debates over journalism education all the more urgent and important. What skills should be imparted? How much should journalism schools emphasize subject knowledge over technical skill? Who should be teaching the multi-faceted curricula of the present? And what is the best preparation for educators? All of these questions and more have stirred heated argument in the academy for several decades now (Folkerts, 2014; Folkerts, Hamilton & Lemann, 2013).

As society has become more data-driven across many domains, and as software for analysis of all kinds has become more available and less expensive, new possibilities for journalism have come about (Coddington, 2015). At the same time, academic literature on all subjects has become much more available online, creating a wealth of new interpretive possibilities and ways of communicating with and informing the public (Wihbey, 2016). Science, in both its methods and its findings, and statistical reasoning generally have become more valuable, yet neither has been a traditional strength of journalists (e.g., Boykoff & Boykoff, 2004; Maier, 2002; Pellechia, 1997).

Journalism schools offer a key pathway toward improving journalism in these respects, both as the venue in which many professional journalists are trained and as a potentially fruitful connection between the cultures of professional journalism and academic research communities. In particular, as data journalism becomes an increasingly important component of online journalism writ large, there is a growing need for sophisticated training around using and interpreting quantitative information. Yet journalism educators, too, have struggled to implement scientific and statistical literacy for journalists in a substantial way (Cusatis & Martin-Kratzer, 2010; Dunwoody & Griffin, 2013; Martin, 2016). This study asks whether there may be grounds for a convergence in valuing these skills and approaches between journalists and journalism educators—and between factions within journalism educators based on familiarity with scientific and academic research. It investigates attitudes toward the use of research and statistical competency among members of both communities, seeking to locate key points of agreement and disagreement that may have implications for journalism training and practice.

Literature Review

Journalists, Science and Statistics

Journalism has long played a crucial role in the public’s understanding of complex issues and scientific research, with the news media being by far the most prominent way the public hears about science (Hijmans, Pleijter, & Wester, 2003; Schäfer, 2012). News coverage has a major impact not only on the public, but on the scientific community itself, influencing professional legitimacy, funding, and public support among scientists (Dunwoody, 1999; Schäfer, 2012). Dunwoody (1999) posits that journalists and scientists have formed a shared culture in which they negotiate responsibility for interpreting and publicly defining scientific research, with journalists taking on more definitional authority in cases of scientific controversy. There is a long tradition of journalists and scholars arguing that journalists and scientists have even more than this in common: a shared method of ascertaining reality. Walter Lippmann (1922/1961) famously advocated for this quasi-scientific approach to testing reality in journalism nearly a century ago, and his call has been taken up since then in a variety of forms, not least in Bill Kovach and Tom Rosenstiel’s (2007) articulation of “objectivity as method” as a crucial element of journalistic practice.

Most prominently, journalist and professor Philip Meyer outlined in 1973 a form of “precision journalism” that borrows heavily from the methods of social science. Meyer argued that scientists and journalists share a fundamental orientation toward reality testing, using mindsets and methods built around skepticism, replicability, and operationalization. Precision journalism, Meyer wrote in the 2002 edition of his guide on the subject, “means treating journalism as if it were a science, adopting scientific method, scientific objectivity, and scientific ideals to the entire process of mass communication” (p. 5).

Yet for all this overlap in topic, purpose, and in some cases culture and method, journalists’ record in covering scientific research is remarkably spotty. Journalistic interest in science grew steadily from the 1960s through the 1980s, spurred in part by the space race and environmental concerns (Dunwoody, 2014; Pellechia, 1997). Since then, journalistic references to scientific research have remained largely steady (though very scarce in broadcast news), but have shifted heavily toward medical and health research (Bauer, 1998; Dunwoody, 2014; Pellechia, 1997). A major exception to this is journalism’s heavy emphasis on political polling, which falls under the purview of social science. Journalists have made polls a significant part of their political coverage, though they tend to cover them as pieces of political information rather than science per se, with very little information on their methods and scientifically based limitations (Rosenstiel, 2005). Peer-reviewed social science is rarer: In a study of Dutch newspapers, Hijmans and colleagues (2003) found that social science was the most-covered scientific area, but it dropped behind medical research when non-university studies were excluded.

Across subjects, researchers have found that journalists have had substantial problems conveying the findings and characteristics of scientific research accurately and clearly. Early studies on accuracy of scientific news coverage found it riddled with errors, particularly from the scientists’ perspective (Dunwoody & Scott, 1982; Singer, 1990; Tankard & Ryan, 1974). In recent years that concern has shifted toward the amplification of dissenting voices and undue doubt cast on scientific research in the name of journalistic balance, with journalists often using the frameworks of political journalism to cover scientific issues and constructing ignorance rather than knowledge (Boykoff & Boykoff, 2004; Boykoff & Mansfield, 2008; Stocking & Holstein, 2009). Across all of these cases, the most consistent complaint regarding news coverage of science is that it is simply incomplete: bite-sized coverage of scientific research, with little attention to the context in which a study appears or the process by which it was produced (Pellechia, 1997). News coverage of science tends to overemphasize studies’ uniqueness and scientific contribution and dramatically downplay their limitations, with minimal space devoted to their methods (Dunwoody, 2014; Hijmans et al., 2003; Pellechia, 1997).

The lack of attention to research methods in particular has a variety of causes: Journalists are eager to emphasize the newsworthiness of their story subjects and minimize any elements that might detract from that; they trust their sources and often unquestioningly adopt their ways of understanding reality (Dunwoody, 2014; Pellechia, 1997). But just as significantly, journalists’ circumvention of methodological details reflects their long-held avoidance of statistics and numbers more broadly. “Mathematical incompetence among journalists is legendary,” notes Maier (2002, p. 507), and his study provides some weight to that popular conception, finding dozens of types of mathematical errors in a month-long review of a single American newspaper, many of them very simple.

Yet despite the documented difficulties journalists display with numbers, there is also evidence that journalists’ fear of numbers outstrips their actual inadequacy with them. In a study of the same newspaper, Maier (2003) found that a lack of mathematical confidence persisted even among journalists who performed well on a numeracy assessment or who had received good grades in math in school. This lack of confidence can lead journalists to avoid mathematical or statistical representations in news, even ones they may be capable of doing accurately themselves (Curtin & Maier, 2001; Livingston & Voakes, 2005). That this fear of numbers leads to an avoidance of statistics is especially unfortunate because mathematics and statistics are different things, and an understanding of statistics does not necessarily require an understanding of advanced mathematical functions. As Nguyen and Lugo-Ocando (2016) note, because most of the datasets that journalists use are pre-packaged, all that journalists often need in order to evaluate and accurately present them is a questioning mindset and basic statistical reasoning.

This phobia has damaging consequences for journalism, not only in the science news inaccuracies and omissions described above, but for news coverage across a variety of subjects, ranging from business to politics to sports. Now at least as much as ever, news is quite often built around numbers. “Even when we journalists say that we are dealing in facts and ideas, much of what we report is based on numbers,” note Cohn, Cope, and Runkle (2012, p. 3) in their guide to statistics for journalists. In addition to allowing journalists to avoid errors and more fully report on number-related stories, a facility with figures gives journalists more independence from their sources, allowing them to evaluate and interpret statistical information for themselves without relying on the source that provided it to them. Journalists widely recognize the importance of numeracy and statistical literacy, even those who are anxious about numbers in their work (Curtin & Maier, 2001; Livingston & Voakes, 2005). And while innumeracy and statistical anxiety persist among journalists, statistical reasoning is taking on a greater prominence within several forms of journalism through the rise of data journalism and computational journalism (Coddington, 2015).

The political analysis of Nate Silver and his colleagues at FiveThirtyEight, in particular, has brought to bear the methods of social science on political polls through a more statistically sophisticated approach to evaluating survey data, and newsrooms around the world, large and small, have incorporated data analysis and visualization more deeply into their everyday newsgathering operations (Fink & Anderson, 2015). Despite the weight of decades of journalists’ recorded and perceived statistical inadequacy, it remains possible for them to develop a more thorough approach to both statistics and scientific research in their work.

The Journalism-Academy Divide

The relationship between the news industry and journalism education is an important link in explaining and resolving these deficiencies. The realm of journalism education represents the closest point of contact many journalists have with the intellectual and (social) scientific work of the academy: it is the academic realm in which many of them were educated and the one whose research most closely relates to their own professional worlds. And it is also the most appropriate place to spearhead the fight against journalists’ fear of and difficulty with statistics and numbers. But academic journalism education has faced its own set of pressures and challenges that have made it difficult to guide journalists toward deeper and more sophisticated coverage of scientific and statistical issues.

Journalism education is defined by its marginality. It is caught between the academy and the profession—too skills-based and professional to be granted full legitimacy by the academy and the universities of which they are a part, and too bound up in the culture and priorities of academia to be seen as properly useful by the news industry. The result is a field without clout in the academy and often disdained by the profession it was established to serve (Deuze, 2006; Hamilton, 2014; Reese, 1999). The criticism of journalism education has been withering at times from within the industry (Lewis, 1993), elsewhere in the academy (Hamilton, 2014), and from within, as when communication professor Robert Picard told a gathering of journalism educators in 2014 that journalism education “has not developed a fundamental knowledge base, widely agreed upon journalistic practices, or unambiguous professional standards. Large numbers of journalism educators have failed to make even rudimentary contributions toward understanding the impact of journalism and media on society” (2014, p. 5).

The critiques from the news industry have been the most persistent and salient. Journalists often see journalism education as unnecessary for or even irrelevant to their work; a 2013 Poynter Institute survey found that while 96% of journalism educators saw a journalism degree as very or extremely important for understanding the values of journalism, just 57% of professionals said the same (Finberg, Krueger, & Klinger, 2013). In recent years, that derision has centered on journalism schools’ perceived inability to adapt to rapid technological changes in the industry (Folkerts, 2014; Lynch, 2015).

Complaints about journalism education’s failure to keep pace with (or even lead) the profession have often been rooted in a concern that journalism education is too academic—too focused on research, and too highly valuing academic credentials over professional experience. This critique has echoed through the decades, going back even to the origins of journalism education in the late 1800s, and it was most famously expressed in the Freedom Forum’s 1996 Winds of Change report, which called for journalism programs to de-emphasize theoretical courses and add faculty with deeper professional experience (Fedler, Counts, Carey, & Santana, 1998; Folkerts, 2014; Medsger, 1996). Some academics have met this criticism with the argument that journalism education has been stifled by the opposite—being too beholden to the profession, allowing the news industry to dictate its goals and focus overwhelmingly on producing entry-level news workers, to the detriment of sustained intellectual activity and innovation (Picard, 2014; Reese, 1999).

Some of that conflict between professionals and journalism educators has centered on the doctorate in particular as a marker of academic fitness (or obsolescence, as the case may be). For many professionals, the doctorate represents an unnecessarily onerous barrier to entry into journalism education that privileges esoteric research and keeps out experienced and qualified professionals. For many journalism academics, the degree “demonstrates a commitment to the academic profession and cultivation of certain habits of mind and inquiry” (Reese, 1999, p. 86). This split has long run through many journalism programs, with academics and professionals (often marked as those with and without doctorates) struggling for power over the program’s hiring, resources, and curriculum (Folkerts, 2014; Reese & Cohen, 2000). The Winds of Change report argued that a substantial number of journalism school faculty had doctorates without relevant professional media experience and that many faculty members said a doctorate shouldn’t be a necessary criterion for hiring (Medsger, 1996). Fedler and colleagues (1998) found that while most journalism faculty members had doctorates (between 66% and 89%, depending on specialty), many of those doctoral degree holders also had substantial professional experience. Many journalism schools have opted to take what Reese calls an “ecumenical view” (1999, p. 80), hiring both those with doctorates and those without, though this has not necessarily resolved the tension between the two groups.

Statistical Education of Journalists

Journalism education’s status straddling the theoretical and the practical and its attempts to keep up with a rapidly changing industry have left many programs with substantial challenges in providing training in a full complement of journalistic skills and concepts, and statistical reasoning and numeracy are no exception. Several studies have found that statistical and mathematical training for journalism students is lackluster, and often outsourced to other academic units. Cusatis and Martin-Kratzer (2010) found that 64% of U.S. journalism programs required three or fewer general education credits in math, and just 12% offered a math-oriented course within their own units. Of those journalistic math courses, two-thirds were elective. Regarding statistics, in a survey of administrators, Dunwoody and Griffin (2013) found that 36% of U.S. journalism programs required most or all of their students to take a statistical reasoning course within journalism, while Martin (2016) found in a review of program requirements that just 19% of U.S. programs required journalism majors to take a statistics course, and none of them offered a course themselves that met the requirement. Journalism administrators preferred to incorporate math and statistical reasoning into a variety of courses rather than a standalone course, though the depth of that incorporation is unclear (Cusatis & Martin-Kratzer, 2010; Dunwoody & Griffin, 2013).

Journalism educators may not offer much in the way of statistical and mathematical training, but they’re eager to publicly affirm its importance. In surveys, large majorities of journalism educators have repeatedly asserted the importance of statistical literacy and numeracy for journalists—even more than journalists themselves (Cusatis & Martin-Kratzer, 2010; Dunwoody & Griffin, 2013; Finberg et al., 2013). “Administrators seem convinced of the importance of such learning but remain unwilling or unable to muster training responsive to the need,” note Dunwoody and Griffin (2013, p. 535). So what’s holding them back? Researchers have cited a number of factors, some of them structural: Lack of room in the curriculum—sometimes exacerbated by accreditation requirements—has been cited as a chief factor (Cusatis & Martin-Kratzer, 2010; Griffin & Dunwoody, 2016). Another major obstacle cited by educators is a distaste for such subjects among their students. Many administrators have said their students try to avoid statistical or math training, or lack even the basic mathematical aptitude to do well in such courses (Berret & Phillips, 2016; Cusatis & Martin-Kratzer, 2010; Dunwoody & Griffin, 2013). Data show the latter concern in particular is unfounded; journalism students enter college with no worse mathematical scores on college entrance exams than their peers in other disciplines (Dunwoody & Griffin, 2013; Nguyen & Lugo-Ocando, 2016).

Journalism students’ aversion to statistical training may well be fueled in part by their instructors’ lack of emphasis on the skill as a crucial component of good journalism. Administrators have expressed skepticism that their faculty have the expertise to teach data analysis skills (Berret & Phillips, 2016; Yarnall, Johnson, Rinne, & Ranney, 2008). Dunwoody and Griffin (2013) found that 53% of journalism education administrators said most of their faculty would have difficulty teaching statistical reasoning in their courses, though another study rated it as a low concern (Cusatis & Martin-Kratzer, 2010). A lack of faculty expertise in data and statistical reasoning might seem an odd barrier in a field whose research is primarily quantitative social science, though many journalism educators are also former journalists, having come from the often statistically apprehensive culture of the traditional newsroom.

In the classroom, too, the rise of data journalism creates the possibility for a surge in statistical and numerical education for journalists. With demand for data skills rising within the news industry, many journalism programs are scrambling to respond, whether that involves developing entirely new programs or concentrations (such as Columbia University’s dual master’s program in journalism and computer science) or new courses in data analysis and visualization (Griffin & Dunwoody, 2016). With both the profession and academy moving on parallel tracks toward greater emphasis on statistical reasoning and data-driven forms of evidence, this study examines the degree to which the perspectives of professionals and journalism educators diverge regarding the value of academic research and statistical analysis in journalism, as well as the differences between journalism educators with and without extensive academic backgrounds. To that end, we pose the following research questions:

RQ1: What is the relationship between the attitudes of professional journalists and journalism educators regarding the value of academic research in journalism?

RQ2: What is the relationship between the attitudes of journalism educators with and without doctoral degrees regarding the value of academic research in journalism?

RQ3: What is the relationship between the attitudes of journalism educators with and without statistical efficacy themselves regarding the value of academic research in journalism?

RQ4: What is the relationship between the attitudes of professional journalists and journalism educators regarding the value of statistical literacy in journalism?

RQ5: What is the relationship between the attitudes of journalism educators with and without doctoral degrees regarding the value of statistical literacy in journalism?

RQ6: What is the relationship between the attitudes of journalism educators with and without statistical efficacy themselves regarding the value of statistical literacy in journalism?

Method

Sample

The survey took advantage of unique access to a wide distribution list assembled by Harvard University’s Journalist’s Resource project, run by the Shorenstein Center for Media, Politics and Public Policy. The online survey was sent out in September 2015 to a large initial list of more than 20,000 individuals in an attempt to recruit the target population of journalists and journalism educators. Access to this list was provided at no cost. Two email reminders about the survey were sent over the course of the subsequent three weeks.

The list included 8,964 identified journalists and media educators, as well as other academics, researchers, information industry workers, government employees, students, and others. The survey featured an initial screening question that asked respondents to identify their profession/roles. There were 2,140 respondents who self-identified in the journalist and media educator categories (these included freelancers/part-time journalists and communications instructors), yielding a response rate of 24% among those groups. Of course, it is possible there were journalists or media educators on the initial list who were not identified, which would bring down the response rate among those groups. There were 1,118 full-time working journalists and 403 respondents who self-identified as journalism instructors. Two adjacent groups were excluded from the analysis: Those who work in a profession other than journalism but do occasional freelance work (N = 461), and 158 respondents who identified themselves as mass communication instructors. This analysis, therefore, has the advantage of comparing two core groups and relatively undiluted samples: those who work full-time in journalism with those specifically teaching the practice of journalism.
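The arithmetic behind the sample description can be checked directly. The short Python sketch below uses only the counts reported above; the variable names are illustrative and are not taken from the study's materials.

```python
# Counts reported in the Sample section; names are illustrative only.
identified_on_list = 8964  # identified journalists and media educators on the list
respondents = 2140         # respondents self-identifying in those categories

groups = {
    "full_time_journalists": 1118,   # analyzed
    "journalism_instructors": 403,   # analyzed
    "occasional_freelancers": 461,   # excluded from the analysis
    "mass_comm_instructors": 158,    # excluded from the analysis
}

response_rate = respondents / identified_on_list
analyzed = groups["full_time_journalists"] + groups["journalism_instructors"]

print(f"response rate: {response_rate:.1%}")
print(f"sum of the four groups: {sum(groups.values())}")
print(f"respondents retained for analysis: {analyzed}")
```

The four groups account for all 2,140 respondents, and the ratio rounds to the 24% reported in the text.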

The survey results represent an online convenience sample, but the underlying characteristics mirror those of the general population of U.S. professional journalists. For example, the 1,118 journalists surveyed were 46% female, slightly higher than the proportion of women found in U.S. newspapers, though close to the proportion found in TV and online-only newsrooms (ASNE Diversity Survey, 2016; Papper, 2016). The median age in journalism has also been rising and stands at about 47 (Willnat & Weaver, 2014). About 62% indicated they had worked in journalism for 20 years or more, with an additional 21% having 10-19 years of experience and 17% with fewer than 10 years. If experience measurements are used as proxies for age, the survey sample does not skew substantially toward older or younger journalists. Further, 93% of journalists in the sample indicated that they have a bachelor’s degree; this is virtually identical to the 92% figure that Willnat and Weaver (2014) produced in their random sample. (Among journalists examined in this paper’s analysis, 49 indicated they had a Ph.D. or other doctoral degree, 412 had a master’s degree, 579 had a bachelor’s, and 78 had less than a bachelor’s.) Of the journalists analyzed in this study, 545 worked in newspapers, 140 in television/video, and 138 in audio/radio/podcasts, a distribution across news media that also roughly corresponds with the Willnat and Weaver (2014) survey.

Likewise, the group of 403 journalism instructor survey respondents appears to be roughly in line with other surveys of journalism educators. The group was 51% female, which compares closely to the most recent ASJMC annual survey total of 46% female journalism educators (Becker, Vlad, & Stefanita, 2015). In our sample, 48% were at research universities, 48% at teaching-oriented or liberal arts institutions, and 3% at community or technical colleges. In terms of their primary preparation for work, 46% cited having been a professional journalist, 15% cited an academic advanced degree, 30% cited a mix of professional journalism and a training degree, and 8% cited a communications background. The distribution of academic degrees among our sample diverges from the ASJMC sample; specifically, 35% of the educators in our sample indicated they had a Ph.D., 50% had a master’s degree, and 14% had a bachelor’s degree, whereas the ASJMC survey indicates 55% of educators with a Ph.D., 35% with a master’s degree, and 8% with a bachelor’s (Becker, Vlad, & Stefanita, 2015). The ASJMC survey, however, includes all types of faculty at journalism and mass communication programs, including many professors who predominantly teach mass communication, often at the graduate level, who are more likely to have Ph.D.s, while ours focuses strictly on journalism instructors.

Variables

The attitudes of journalists and educators were solicited together on the survey on a group of questions for the purposes of comparing evaluations of the importance of research and statistics in journalism. For the first of the three dependent variables, regarding the value of academic research, respondents were asked how much value they believed academic research has for journalists in five items: 1) Deepening story context; 2) Improving the framing of a story; 3) Strengthening the story’s accuracy; 4) Providing ideas for follow-up stories; 5) Countering inaccurate or misleading source claims. For each response, they were given the following choices: Very important; Somewhat important; Not very important. For the proportion test, answers indicating “very helpful” were in each case compared against the aggregate of the other two. The five items were combined into a single variable for overall value of academic research for journalism (Cronbach’s α = .881).

The other two dependent variables measured how important respondents rated statistical knowledge or literacy in journalism. Respondents were asked how important it is for journalists to be able to do statistical analysis on their own and interpret statistics generated by other sources. For these variables, too, answers were recoded into two groups: “very important” in one group and both “somewhat important” and “not very important/unimportant” in the other. There was a third question in this survey section regarding the importance of using statistical knowledge for the purposes of interpreting research studies, but its findings were excluded here, as it did not contribute to internal consistency among the three questions (Cronbach’s α = .553) and therefore the variables could not be combined.
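The two-group recoding described above can be sketched in a few lines of Python. This is an illustration only: the function name and the sample answers are hypothetical, and the exact response strings in the survey export are assumed to match the labels quoted in the text.

```python
# Response labels as quoted in the text; their exact spelling in the raw
# survey data is an assumption of this sketch.
ALLOWED = {
    "very important",
    "somewhat important",
    "not very important/unimportant",
}

def recode_very_important(response):
    """Return 1 for "very important" and 0 for either of the other two choices."""
    normalized = response.strip().lower()
    if normalized not in ALLOWED:
        raise ValueError(f"unexpected response: {response!r}")
    return 1 if normalized == "very important" else 0

# Hypothetical answers, not taken from the study's data.
answers = ["Very important", "Somewhat important", "Not very important/unimportant"]
recoded = [recode_very_important(a) for a in answers]
print(recoded)
```

Collapsing the scale this way yields a binary outcome, which suits both a two-group proportion test and a binary logistic regression.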

One independent variable employed in the analysis was simply the attainment of a doctorate. The other independent variable was a combined measure relating to respondents’ own assessment of their ability to perform data analysis. They were asked how equipped they were on three measures: 1) Do statistical analysis on your own; 2) Interpret statistics generated by other sources; 3) Interpret research studies. The choices offered were: Very well equipped; Somewhat equipped; Not well equipped. The three items were combined into a single variable, statistical efficacy (Cronbach’s α = .885). In addition, the respondents’ gender (51.1% female) was included as a variable because gender has been associated with math and statistical anxiety among journalists and others (e.g., Maier, 2003).
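For readers unfamiliar with the reliability statistic reported for the combined scales, the following minimal Python sketch computes Cronbach's α from its standard definition, α = k/(k − 1) × (1 − Σ item variances / variance of summed scores). The function name and the toy responses are hypothetical; they are not the study's data and will not reproduce the reported values.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Hypothetical three-item responses coded 1 (not well equipped) to 3 (very well
# equipped); invented for illustration only.
toy_responses = [
    [3, 3, 3],
    [2, 2, 3],
    [1, 1, 2],
    [3, 2, 3],
    [1, 2, 1],
    [2, 2, 2],
]
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items move together, which is the usual justification for combining them into a single scale.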

To compare journalists and educators as groups, the data were analyzed through chi-square proportion tests. Further, in order to examine the underlying causes of attitudes among educators, a binary logistic regression analysis was performed to evaluate the relationship between educators’ attitudes and both their educational attainment level and their statistical efficacy. Analysis was conducted via SPSS.

Results

Overall, journalists saw much more promise in the power of academic research to help improve journalism practice as compared to educators, though there were few significant differences between journalists and journalism educators on questions regarding the importance of statistical literacy for journalism. Among educators, there were two significant positive associations between statistical efficacy and their attitudes about both academic research and statistics; however, degree attainment showed no significant association.

RQ1 addressed the relationship between attitudes among professional journalists and journalism educators regarding the value of academic research in journalism practice. Across the five combined items measuring the perceived value of academic research for journalism for RQ1, 47.7% of journalism educators (n = 403) rated academic research “very helpful” for journalism on various measures compared to 66.4% for journalists (n = 1,118), a statistically significant difference (χ² = 180.71, df = 1, p < .001). Professional journalists, then, showed stronger support for the importance of academic research in journalism practice than did educators.

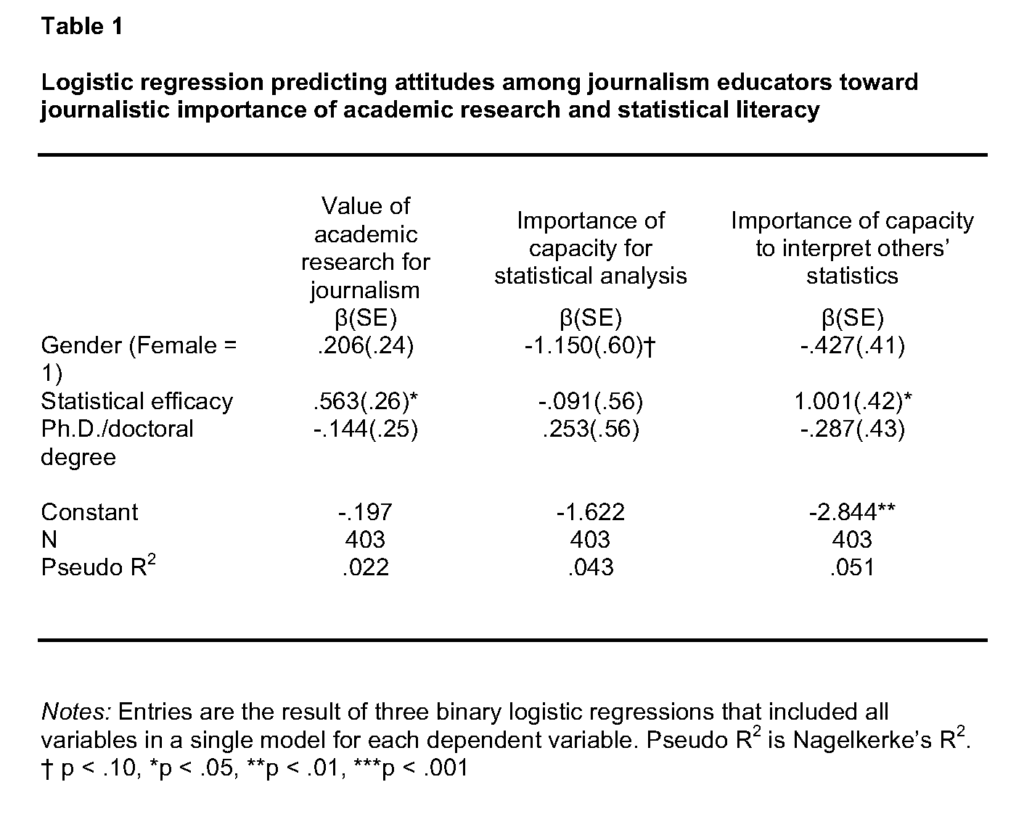

We then examined differences among journalism educators’ attitudes about the value of research for journalists, both regarding their own educational attainment (RQ2) and their statistical efficacy (RQ3). As the results in Table 1 show, whether or not a journalism educator has attained a doctoral degree was not a significant predictor of support for the idea that academic research has value for journalists in logistic regression analysis (β = -.144, p = .953), but educators with more statistical efficacy were significantly more likely to see the value of academic research in journalism (β = .563, p < .05).

RQ4 addressed the differences between professional journalists and journalism educators on their attitudes toward the value of statistical literacy for journalism. The statement that journalists should be able to “do statistical analysis on their own” drew about the same amount of support as “very important” from journalists (40.6%, n = 881) and educators (35.7%, n = 342; χ² = 2.341, df = 1, p = .126). Likewise, journalists and educators were about equally likely to rate as “very important” that journalists should be able to “interpret statistics generated by other sources” (80.2% of journalists, n = 882; 78.6% of educators, n = 341), a non-significant difference (χ² = 0.283, df = 1, p = .595).
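The two-group comparison on the “do statistical analysis on their own” item can be sketched in Python as a chi-square test on a 2×2 table. This sketch rests on two assumptions that the text does not state: the cell counts are reconstructed by rounding the reported percentages, and a Yates continuity correction is applied. The function name `chi_square_2x2` is likewise hypothetical.

```python
import math


def chi_square_2x2(yes_a, n_a, yes_b, n_b, yates=True):
    """Chi-square test (df = 1) comparing two proportions via a 2x2 table."""
    a, b = yes_a, n_a - yes_a      # group A: "very important" vs. all other answers
    c, d = yes_b, n_b - yes_b      # group B: "very important" vs. all other answers
    n = n_a + n_b
    diff = abs(a * d - b * c)
    if yates:
        # Continuity correction (an assumption; the article does not say it was used).
        diff = max(0.0, diff - n / 2)
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Upper-tail probability of a chi-square variable with 1 degree of freedom.
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Counts reconstructed from the reported percentages (an approximation).
journalists_yes = round(0.406 * 881)
educators_yes = round(0.357 * 342)

chi2, p = chi_square_2x2(journalists_yes, 881, educators_yes, 342)
print(f"chi-square = {chi2:.3f}, p = {p:.3f}")
```

Under these assumptions the result lands close to the reported statistic of 2.341 and p value of .126, though exact agreement depends on the true cell counts, which are not given in the text.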

On these items, too, we tested differences in attitudes toward statistical literacy among journalism educators between those with and without a doctoral degree (RQ5) and those with greater or lesser statistical efficacy (RQ6). In logistic regressions, having a doctoral degree did not predict that a journalism educator would see greater value in statistical literacy for journalism (see Table 1), either to do statistics on their own (β = .253, p = .649) or to interpret statistics generated by other sources (β = -.287, p = .501). But journalism educators with more statistical efficacy themselves were significantly more likely to value the journalistic ability to interpret statistics generated by other sources (β = 1.001, p < .05). However, educators with more statistical efficacy were not more likely to see the value of journalists’ ability to do statistical analysis on their own (β = -.091, p = .870).
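For readers less used to logistic regression output, the two significant efficacy coefficients reported in this section can be converted to odds ratios by exponentiation. The short Python sketch below assumes that the reported β values are unstandardized log-odds coefficients and that a one-unit change refers to the combined efficacy measure as scaled in the original analysis; the text does not spell out either point, so the results are illustrative only.

```python
import math


def odds_ratio(beta):
    """Convert a logistic regression coefficient (log-odds) into an odds ratio."""
    return math.exp(beta)


# Coefficients for statistical efficacy as reported in the Results section.
reported = {
    "value of academic research": 0.563,
    "interpreting statistics from other sources": 1.001,
}

for outcome, beta in reported.items():
    print(f"{outcome}: odds ratio of about {odds_ratio(beta):.2f}")
```

Read this way, a coefficient of .563 corresponds to odds roughly 1.76 times as high per unit of efficacy, and 1.001 to odds roughly 2.72 times as high, subject to the assumptions above.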

As a control variable in the logistic regressions, gender did not play a significant role in predicting attitudes on the value of academic research or statistical literacy among educators. We also conducted proportion tests based on male and female respondents’ perception of the usefulness of academic research and statistical literacy, and there was no significant difference by gender among educators, though among journalists, female respondents were more likely to rate academic research (χ² = 50.597, df = 1, p < .001) and statistical literacy (χ² = 12.063, df = 1, p < .001) “very important” for journalism.

The overall effect sizes of the regression models were limited, with the largest Nagelkerke’s R² being .051 for the importance of journalists being able to interpret statistics generated by other sources.
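Nagelkerke's pseudo-R-squared rescales the Cox and Snell measure so that its maximum is 1. A minimal Python sketch of the calculation follows; the log-likelihood values and sample size are hypothetical placeholders, not figures from this study, and the function name `nagelkerke_r2` is an assumption of the sketch.

```python
import math


def nagelkerke_r2(ll_null, ll_model, n):
    """Nagelkerke pseudo-R-squared from null and fitted model log-likelihoods."""
    # Cox and Snell R-squared: 1 - (L_null / L_model) ** (2 / n), in log form.
    cox_snell = 1 - math.exp(2 * (ll_null - ll_model) / n)
    # Largest value Cox and Snell can reach for this null model.
    max_cox_snell = 1 - math.exp(2 * ll_null / n)
    return cox_snell / max_cox_snell


# Hypothetical log-likelihoods for an intercept-only and a fitted model.
r2 = nagelkerke_r2(ll_null=-200.0, ll_model=-196.0, n=340)
print(round(r2, 3))
```

A fitted model that improves only slightly on the intercept-only model yields a value close to zero, which is the pattern the small effect sizes reported here reflect.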

Discussion

This study has two primary findings regarding attitudes toward academic research and statistical literacy, one that might be expected and the other more counterintuitive. Somewhat surprisingly, journalists placed a higher emphasis than journalism educators on the value of academic research to journalism, even though the journalism educators are the ones operating within the academy. Among journalism educators, those attitudes did not significantly vary based on possession of a doctoral degree, though educators’ statistical efficacy was a significant predictor of valuing academic research and statistical literacy for journalists.

To understand why journalism educators had less faith in the power of university-generated research insights than their counterparts in the industry, it is important to consider that those educators’ backgrounds tend to be more professional and less academic than their peers in the academy. About three-quarters of the 403 journalism educators surveyed overall reported getting some significant part of their primary training for academia through the media industry: 46.1% cited professional journalism as their primary training, while another 30.5% cited a mix of journalism and academic training. Few (15.1%) reported coming to college or university teaching purely through academic training, and 35% of the sample reported having a doctorate. Many may have left the industry too long ago to witness from the newsroom the flurry of very recent knowledge-related innovations enabled by the Internet—whether the rise of online scholarly databases, the movement toward open-access online publishing of research, or the open publication of millions of datasets—and to see first-hand their importance to a quickly changing news industry.

Another explanatory hypothesis for these results, which merits further investigation, may be that journalists and journalism educators have different conceptions of academic research as it relates to the practice of journalism. Working journalists may increasingly see the value of incorporating research-based knowledge from across disciplines into their stories (and into their own backgrounding and sourcing routines), while journalism educators—perhaps habituated to conceive of academic research more in terms of communications and journalism studies—may have their own field in view and thus acknowledge the relative narrowness of much media-oriented research. In other words, contained in these results may be an implicit critique of journalism studies research by scholars themselves as it relates to journalism practice. Again, though, such a hypothesis would need to be confirmed by more detailed survey research. It may be notable, however, that there were no significant differences between journalists and journalism educators in the perceived journalistic value of statistical literacy; divergence only emerged regarding academic research, which may indicate that the two groups had different referents in mind when considering that research.

Among journalism educators themselves, this study suggests that the old division between the academic “chi-squares” and the professional “green eyeshades” has softened and become more complex, at least on this issue (Highton, 1989). Where one might expect the academically based journalism educators to be more statistically minded and research-inclined, we found no significant differences between those with and without doctoral degrees. This matches the trajectory of quantitative journalistic education over the past two decades: while mass communication researchers continue to push into more statistically sophisticated modes of inquiry, many of the field’s leaders in computer-assisted reporting and data journalism instruction—such as Brant Houston, Steve Doig, and Sarah Cohen—have come straight from the news industry. In addition, it’s possible that quantitatively minded, Ph.D.-holding academics have compartmentalized their statistical knowledge, seeing it as something that applies to their mass communication research more readily than journalism itself. In this sense, it is notable that while research methods courses in journalism and mass communication programs are almost invariably taught by a faculty member with a doctorate, many programs have turned to adjuncts—that is, working journalists—to teach the skills of literacy in data and statistics as they apply to journalism (Berret & Phillips, 2016).

Rather than education, it was statistical efficacy that predicted a perception of high journalistic value for academic research and statistical literacy among journalism educators. This is an intuitive finding; it is not surprising that those who are more confident in their statistical skills are more likely to see value in academic research and statistical literacy. But coupled with the lack of significant differences based on educational attainment, this finding suggests that the ability to feel comfortable with statistics and the inclination to see them and academic research as valuable to journalism are not characteristics endemic to either academic or professionally oriented journalism educators. Instead, the facility with and passion for statistics and research in journalism can emerge from both academic and professional settings and find distinct applications both in the academy and the newsroom.

Conclusion

This study offers a glimpse into the attitudes of both journalists and educators regarding two increasingly important elements of journalism: its use of academic research and its fluency with statistical analysis and reasoning. In doing so, it is not without limitations. First, the study is based on a convenience sample of subscribers to a mailing list regarding scholarship relevant to journalists, media practitioners, and students. The respondents to the survey, then, are more likely than most journalists and educators to be interested in academic research and to see value in statistical literacy. While this does not compromise the integrity of the statistical analysis and comparisons conducted within the sample, it does call for extreme caution in extrapolating the results to groups beyond this sample. Future research should address these questions with a random sample in order to produce a more generalizable result.

Second, several of the measurements are quite general; measures such as statistical efficacy and the value of statistical literacy in journalism could be deepened and broadened to include more dimensions and finer distinctions. Future research in this area could expand these variables and include other potentially helpful ones, such as years of journalism experience, years of teaching experience, or others measuring actual, rather than perceived, facility with statistics and research.

Despite these limitations, this study makes an important contribution to the literature in this area: It is the first to compare opinions of journalists and journalism educators on the value of academic research and statistical literacy in journalism (though Finberg et al. [2013] provide some basic numbers from the two groups on attitudes toward data analysis). It is also the first to examine the role of statistical efficacy and degree attainment in journalism educators’ attitudes towards those subjects. In doing so, it fleshes out the picture given by journalism program administrators (Cusatis & Martin-Kratzer, 2010; Dunwoody & Griffin, 2013) and by journalists (Curtin & Maier, 2001; Dunwoody, 2014) regarding the attitudes toward and obstacles to greater statistical and research literacy among journalists and educators. Specifically, it suggests that both priority and efficacy gaps may exist in this area within journalism programs: some journalism educators, even those with Ph.D.s, simply may not see academic research as particularly useful or important for journalists. Others may have limited confidence in their own statistical literacy, which may lead them to place a lower value on those skills for journalists as a whole. As data journalism continues to grow in importance toward the success of online journalism, these insights help put issues of receptivity, competency, and training in sharper perspective.

Journalism is going through a period of great disruption and promise, a time when business models are perilous and public trust in the news media runs low, but when the opportunities for experimentation in form and function are unprecedented. Innovative data journalism units like FiveThirtyEight and The New York Times’ The Upshot have shown that facility with data—and the academic research through which so much of that data is generated—is crucial in seizing those opportunities to push journalism forward. Many areas of journalistic coverage, politics perhaps chief among them, could benefit from a more knowledge-based approach and a better grasp of the relevant literature, making them less subject to the claims of biased and partisan sources (Nyhan & Sides, 2011).

As journalists develop their newsrooms and as journalism educators revise their curricula and staff their departments, it is important to determine what types of people are most inclined to use research and data productively and what obstacles might exist in incorporating these resources. For journalism programs in particular to advance their training in statistical literacy and science and knowledge-based journalism, it is crucial to close both the priority and efficacy gaps by emphasizing those skills as valuable parts of journalism and by training and empowering instructors to bring them dynamically into the classroom.

John Wihbey is an assistant professor of journalism and new media at Northeastern University School of Journalism, where he teaches in the Media Innovation program. Previously, he oversaw the Journalist’s Resource project at Harvard’s Shorenstein Center on Media, Politics and Public Policy and served for four years as a lecturer in journalism at Boston University. A former radio producer and newspaper reporter, he has written for a range of publications, including Nieman Journalism Lab, Yale Climate Connections, The Boston Globe, The New York Times, The Washington Post, Pacific Standard, and The Chronicle of Higher Education.

Mark Coddington is an assistant professor of journalism and mass communication at Washington and Lee University. He was a newspaper reporter in Nebraska before earning his Ph.D. in journalism from the University of Texas at Austin in 2015. He is a contributor to the Nieman Journalism Lab at Harvard University, where he wrote a weekly piece from 2010 to 2014. His research has been published in journals including Mass Communication and Society, Journalism & Mass Communication Quarterly, Journalism Studies, and the International Journal of Communication.

References

Anderson, C. W., Bell, E., & Shirky, C. (2012). Post-industrial journalism: Adapting to the present. New York, NY: Tow Center for Digital Journalism, Columbia University Graduate School of Journalism. Retrieved from http://towcenter.org/research/post-industrial-journalism-adapting-to-the-present-2/

ASNE 2016 Diversity Survey (2016, September 9). ASNE. Retrieved from http://asne.org/content.asp?contentid=447

Bauer, M. (1998). The medicalization of science news—from the “rocket-scalpel” to the “gene-meteorite complex.” Social Science Information, 37(4), 731-751.

Becker, L. B., Vlad, T., & Stefanita, O. (2015). Professionals or academics? The faculty dynamics in journalism and mass communication education in the United States. Presented to the International Conference, Media and the Public Sphere: New Challenges in the Digital Era. Lyon, France, June 15-16. Retrieved from http://www.grady.uga.edu/annualsurveys/Supplemental_Reports/BeckerVladStefanita_Lyon062015.pdf

Berret, C., & Phillips, C. (2016). Teaching data and computational journalism. New York, NY: Columbia Journalism School. Retrieved from https://journalism.columbia.edu/system/files/content/teaching_data_and_computational_journalism.pdf

Blom, R., & Davenport, L. D. (2012). Searching for the core of journalism education: Program directors disagree on curriculum priorities. Journalism & Mass Communication Educator, 67(1), 70-86.

Boykoff, M. T., & Boykoff, J. M. (2004). Balance as bias: Global warming and the US prestige press. Global Environmental Change, 14, 125-136.

Boykoff, M. T., & Mansfield, M. (2008). ‘Ye olde hot aire’: Reporting on human contributions to climate change in the UK tabloid press. Environmental Research Letters, 3(2), 1-8.

Coddington, M. (2015). Clarifying journalism’s quantitative turn: A typology for evaluating data journalism, computational journalism, and computer-assisted reporting. Digital Journalism, 3(3), 331-348.

Cohn, V., Cope, L., & Runkle, D. C. (2012). News and numbers: A writer’s guide to statistics (3rd ed.). Malden, MA: Wiley-Blackwell.

Curtin, P. A., & Maier, S. R. (2001). Numbers in the newsroom: A qualitative examination of a quantitative challenge. Journalism & Mass Communication Quarterly, 78(4), 720-738.

Cusatis, C., & Martin-Kratzer, R. (2010). Assessing the state of math education in ACEJMC-accredited and non-accredited undergraduate journalism programs. Journalism & Mass Communication Educator, 64(4), 355-377.

Deuze, M. (2006). Global journalism education: A conceptual approach. Journalism Studies, 7(1), 19-34.

Donsbach, W. (2014). Journalism as the new knowledge profession and consequences for journalism education. Journalism, 15(6), 661-677.

Dunwoody, S. (1999). Scientists, journalists, and the meaning of uncertainty. In S. Friedman, S. Dunwoody, & C. L. Rogers (Eds.), Communicating uncertainty: Media coverage of new and controversial science (pp. 59-79). New York, NY: Routledge.

Dunwoody, S. (2014). Science journalism: Prospects in the digital age. In M. Bucchi & B. Trench (Eds.), Routledge handbook of public communication of science and technology (pp. 27-39). New York, NY: Routledge.

Dunwoody, S., & Griffin, R. J. (2013). Statistical reasoning in journalism education. Science Communication, 35(4), 528-538.

Dunwoody, S., & Scott, B. T. (1982). Scientists as mass media sources. Journalism Quarterly, 59(1), 52-59.

Fedler, F., Counts, T., Carey, A., & Santana, M. C. (1998). Faculty’s degrees, experience vary with specialty. Journalism & Mass Communication Educator, 53(1), 4-13.

Finberg, H., Krueger, V., & Klinger, L. (2013). State of journalism education 2013. The Poynter Institute for Media Studies. Retrieved from http://www.newsu.org/course_files/StateOfJournalismEducation2013.pdf

Fink, K., & Anderson, C. W. (2015). Data journalism in the United States: Beyond the “usual suspects.” Journalism Studies, 16(4), 467-481.

Folkerts, J. (2014). History of journalism education. Journalism & Communication Monographs, 16(4), 227-299.

Folkerts, J., Hamilton, J. M., & Lemann, N. (2013). Educating journalists: A new plea for the university tradition. New York, NY: Columbia University Graduate School of Journalism.

Griffin, R. J., & Dunwoody, S. (2016). Chair support, faculty entrepreneurship, and the teaching of statistical reasoning to journalism undergraduates in the United States. Journalism, 17(1), 97-118.

Hamilton, J. M. (2014). Journalism education: The view from the provost’s office. Journalism & Mass Communication Educator, 69(3), 289-300.

Highton, J. (1989). ‘Green eyeshade’ profs still live uncomfortably with ‘chi-squares’; Convention reaffirms things haven’t changed much. Journalism & Mass Communication Educator, 44(2), 59-61.

Hijmans, E., Pleijter, A., & Wester, F. (2003). Covering scientific research in Dutch newspapers. Science Communication, 25(2), 153-176.

Kovach, B., & Rosenstiel, T. (2007). The elements of journalism: What newspeople should know and the public should expect (2nd ed.). New York, NY: Three Rivers.

Lewis, M. (1993, April 17). J-school confidential. The New Republic. Retrieved from https://newrepublic.com/article/72485/j-school-confidential

Lippmann, W. (1961). Public opinion. New York, NY: Macmillan. (Original work published 1922).

Livingston, C., & Voakes, P. (2005). Working with numbers and statistics: A handbook for journalists. Mahwah, NJ: Lawrence Erlbaum Associates.

Lynch, D. (2015). Above and beyond: Looking at the future of journalism education. Miami, FL: Knight Foundation. Retrieved from http://www.knightfoundation.org/features/journalism-education

Maier, S. R. (2002). Numbers in the news: A mathematics audit of a daily newspaper. Journalism Studies, 3(4), 507-519.

Maier, S. R. (2003). Numeracy in the newsroom: A case study of mathematical competence and confidence. Journalism & Mass Communication Quarterly, 80(4), 921-936.

Martin, J. D. (2016). A census of statistics requirements at U.S. journalism programs and a model for a “statistics for journalism” course. Journalism & Mass Communication Educator [Online before print]. doi: 10.1177/1077695816679054

Medsger, B. (1996). Winds of change: Challenges confronting journalism education. Arlington, VA: Freedom Forum.

Meyer, P. (2002). Precision journalism: A reporter’s introduction to social science methods (4th ed.). Lanham, MD: Rowman & Littlefield.

Nguyen, A., & Lugo-Ocando, J. (2016). The state of data and statistics in journalism and journalism education: Issues and debates. Journalism, 17(1), 3-17.

Nisbet, M. C., & Fahy, D. (2015). The need for knowledge-based journalism in politicized science debates. The ANNALS of the American Academy of Political and Social Science, 658, 223-234.

Nyhan, B., & Sides, J. (2011). How political science can help journalism (And still let journalists be journalists). The Forum, 9(1). Retrieved from http://www.dartmouth.edu/~nyhan/poli-sci-journalism.pdf

Papper, B. (2016, July 11). RTDNA research: Women and minorities in newsrooms. Radio Television Digital News Association. Retrieved from https://www.rtdna.org/article/rtdna_research_women_and_minorities_in_newsrooms

Patterson, T. E. (2013). Informing the news: The need for knowledge-based journalism. New York, NY: Vintage.

Pellechia, M. G. (1997). Trends in science coverage: A content analysis of three US newspapers. Public Understanding of Science, 6(1), 49-68.

Picard, R. G. (2014). Deficient tutelage: Challenges of contemporary journalism education. Retrieved from http://www.robertpicard.net/files/Picard_deficient_tutelage.pdf

Reese, S. D. (1999). The progressive potential of journalism education: Recasting the academic versus professional debate. The Harvard International Journal of Press/Politics, 4(4), 70-94.

Reese, S. D., & Cohen, J. (2000). Educating for journalism: The professionalism of scholarship. Journalism Studies, 1(2), 213-227.

Rosenstiel, T. (2005). Political polling and the new media culture: A case of more being less. Public Opinion Quarterly, 69(5), 698-715.

Rutenberg, J. (2016, August 7). Trump is testing the norms of objectivity in journalism. The New York Times. Retrieved from http://www.nytimes.com/2016/08/08/business/balance-fairness-and-a-proudly-provocative-presidential-candidate.html?_r=0

Schäfer, M. S. (2012). Taking stock: A meta-analysis of studies on the media’s coverage of science. Public Understanding of Science, 21(6), 650-663.

Singer, E. (1990). A question of accuracy: How journalists and scientists report research on hazards. Journal of Communication, 40(4), 102-116.

Stocking, S. H., & Holstein, L. W. (2009). Manufacturing doubt: Journalists’ roles and the construction of ignorance in a scientific controversy. Public Understanding of Science, 18(1), 23-42.

Tankard, J. W., & Ryan, M. (1974). News source perceptions of accuracy of science coverage. Journalism Quarterly, 51(2), 219-225.

Wihbey, J. (2016, November). Journalists’ use of knowledge in an online world: Examining reporting habits, sourcing practices and institutional norms. Journalism Practice, 1-16. Retrieved from http://dx.doi.org/10.1080/17512786.2016.1249004

Willnat, L., & Weaver, D. H. (2014). The American journalist in the digital age: Key findings. Bloomington, IN: Indiana University. Retrieved from http://news.indiana.edu/releases/iu/2014/05/2013-american-journalist-key-findings.pdf

Yarnall, L., Johnson, J. T., Rinne, L., & Ranney, M. A. (2008). How post-secondary journalism educators teach advanced CAR data analysis skills in the digital age. Journalism & Mass Communication Educator, 63(2), 146-164.