Exploring Reporter-Desired Features for an AI-Generated Legislative News Tip Sheet

By Patrick Howe, Christine Robertson, Lindsay Grace and Foaad Khosmood

[Citation: Howe, P., Robertson, C., Grace, L. & Khosmood, F. (2022). Exploring Reporter-Desired Features for an AI-Generated Legislative News Tip Sheet. #ISOJ Journal, 12(1), 17-44]

This research concerns the perceived need for and benefits of an algorithmically generated, personalizable tip sheet that could be used by journalists to improve and expand coverage of state legislatures. This study engaged in two research projects to understand if working journalists could make good use of such a tool and, if so, what features and functionalities they would most value within it. This study also explored journalists’ perceptions of the role of such tools in their newswork. In a survey of 193 journalists, nearly all said legislative coverage is important but only 37% said they feel they have the resources to do such coverage now, and 81% said they would improve their coverage if barriers were removed. Respondents valued the ability to receive customizable alerts to news events regarding specific people, issues or legislative actions. A follow-up series of semi-structured interviews with reporters brought forth some concerns on such issues as transparency, trust and timeliness and identified differing normative assumptions on how such a tool should influence their newswork.

This article explores and evaluates a new kind of prototype tool for journalists: an AI-produced tip sheet system called AI4Reporters. In recent years, there have been many developments relating to the use of artificial intelligence in journalism. Purpose–driven tools now exist to help journalists discover potential stories, to help media outlets disseminate stories quickly, and to help audiences discover news stories of value to them and engage with them in novel ways. The tip sheet described in this research is designed to help the field of journalism buttress an important coverage area—public interest news coming out of state legislatures—that, due largely to upheavals in media economics, has been in decline.

In their 2011 article “Computational Journalism,” Cohen, Hamilton and Turner described what they saw as one paradox of the information age: While computers connected via the Internet had made it possible for the public to access seemingly limitless amounts of information, their rise had disrupted one institution that people had depended on to both provide them information about their government and to act as a check upon it.

Journalistic institutions, particularly newspapers, in the digital age have seen both their core mission and their economic model come under threat. Where journalists’ main mission had once been to find information, now their role was increasingly to sift through an abundance of it to find what is relevant and important. Where news companies once offered advertisers convenient access to a local audience, now social media companies offer the ability to micro-target audiences based on the block that they live on and their favorite pastimes, all at lower cost. By 2020, daily circulation of newspapers had fallen from a high of 62 million to an estimated 25 million, and advertising revenue had fallen from a high of $49 billion to less than $9 billion (Pew Research Center, 2021).

Cohen, Hamilton and Turner (the latter two expanding on their 2009 work), however, saw a “silver lining” in the situation journalism was facing. If computers and technology had helped create the threat to public–service journalism, then, they argued, computer scientists had an obligation to work to help journalists perform their watchdog role by providing new interfaces, algorithms, and techniques for extracting data. They argued that “for public-interest journalism to thrive, computer scientists and journalists must work together, with each learning elements of the other’s trade” (2011, p. 66).

Cohen and her co–authors saw such efforts as a logical continuation of the role that technology has played in advancing journalism, from the accountability that developed through the ability of journalists to photocopy government documents, to Philip Meyer’s deployment of social–science methods and tools in newsrooms via so–called Precision Journalism, to the use of relational databases and the rise of computer-assisted reporting. Their paper also foreshadowed potential obstacles that included cultural and technological gaps between what they called “computationalists and journalists” (Cohen, Hamilton & Turner, 2011, p. 68).

Lewis and Usher (2013) encouraged a two–way exchange when it came to bridging those gaps. They explored the ways that the ethics of “hackers” engaged in producing open–source journalism tools might influence and change journalistic norms and practices. They noted that tools used in newsrooms had conventionally been critiqued based on their ability to fit into pre-existing journalistic conventions. They called this a “tool–driven normalization” that assumed that traditional methods were the “inherent good” (2013, p. 9). How, they asked, might journalism innovation be increased if normative values of transparency, iteration, tinkering, and participation—represented in open-source hacking—were adopted by newsrooms?

One concrete example of an aspect of public–affairs journalism that has suffered in recent years is coverage of activities at state legislatures. State legislatures pass laws that dramatically affect people’s lives, but the number of statehouse reporters has declined sharply in recent decades (Enda, Matsa, & Boyles, 2014; Shaw, 2017), leading to less coverage overall. Those reporters that remain in statehouses are more likely to be students or assigned only part–time. Resulting legislative coverage is more superficial (Weiss, 2015; Williams, 2017), focusing largely on the final passage of major bills. That’s too late in the process for citizens to offer informed responses to their lawmakers in a way that could influence policies. State legislative officials have tried to fill the gap in coverage by, for example, offering more legislative-produced social media, video offerings, websites and news-like stories (Weiss, 2015), but government watchdogs say more needs to be done to increase coverage of state legislative news (Shaw, 2017).

The government and political reporters who continue to cover state legislators make use of technology including Internet–era tools and live streams (Cournoyer, 2015). But much of their day-to-day reporting activity wouldn’t look much different from 50 years ago. As described in a Pew Research Center report (2014), statehouse reporters spend their time meeting with lobbyists and lawmakers to learn about potential developments of interest, physically attending committee hearings to track bills, and monitoring legislative floor proceedings, which often progress into the night. The same reporters generally also are expected to cover gubernatorial news, political developments, newsworthy developments among state agencies and court proceedings.

The project described in this paper (AI4Reporters) is motivated by the same spirit reflected in those computational journalism articles. The authors of this paper include a former political reporter, a former legislative chief of staff, a computer scientist, and a scholar of interactive media and communication. An underlying motivation for the project is to expand journalistic coverage of local governments, in this case state legislatures, by providing a tool for journalists to summarize for them potentially newsworthy developments happening in legislatures. An additional motivation is to explore how a cross–section of modern journalists respond to the idea of incorporating AI tools into their daily work. Are journalism AI tools still subjected to a “tool-driven normalization,” judged by their conformance with conventional methods of conducting journalism, or are there signs they might serve to disrupt existing workplace patterns and normative assumptions to serve audiences better?

This paper addresses the following questions:

1. What is the perceived need among journalists for an AI-generated legislative news tip sheet such as ours?

2. What features, abilities and functionalities would journalists find most useful in their daily reporting?

3. What do the answers given by journalists in response to questions about such a tip sheet reveal about how these professionals conceptualize the role of journalistic AI technology in their newswork?

We explore these through analysis of an opinion survey taken by 193 journalists and via in–depth semi–structured interviews with 10 journalists who explored artifacts from a working model of our tip sheet.

This research contributes to the field by describing the development of an algorithmically generated legislative news tip sheet designed to be used by journalists to improve their coverage of state legislative actions (called in this article the AI4Reporters Tip Sheet). We explore journalists’ perceptions of their need for such a tool and their notions of how they might benefit from it. The findings elaborate on the importance that tools designed by computer scientists for use by journalists consider the specific needs and values held by journalists. They also highlight the varied ways that modern reporters think about what journalistic AI tools might help them do (or not do). This article concludes with discussion of potential impacts of the tool and how the project might proceed, particularly in light of what has been learned from the interviews. Overall, the work aims to introduce people to the potential of this tool and to help others more effectively design computational tools that could support public service-oriented journalism.

Literature Review

This review tackles two areas that inform our work. First, it reviews related tools that have used aspects of AI to aid in newsgathering. Second, it examines findings from works that have explored how journalistic news values come into play in the design and use of computational systems to aid in news discovery.

AI-Powered Newsgathering Tools

AI tools have already taken on important roles in journalism in the reporting, writing and distribution of stories (Hansen, Roca-Sales, Keegan, & King, 2017). In 2019, Reuters Institute Digital News Report (Nielsen, Newman, Fletcher & Kalogeropoulos, 2019) researchers found that more than three–quarters of news leaders interviewed think it is important to invest more in Artificial Intelligence (AI) to help secure the future of journalism—but not as an alternative to employing more editors. Most news leaders (73%) saw increased personalization as a critical pathway to the future, according to the report (Nielsen, Newman, Fletcher & Kalogeropoulos, 2019).

News organizations use AI to automatically generate thousands of stories per year in genres such as financial, sports and weather, and this increased coverage has had effects. After The Associated Press partnered with Automated Insights to use AI to generate stories from corporate earnings reports—effectively expanding its corporate earnings coverage by 2,400 companies—investor interest, trading volume and stock prices related to the newly-covered companies increased (Blankespoor, deHaan & Zhu, 2018). One example of AI being used to help with legislative coverage is The Atlanta Journal-Constitution deploying a predictive model to describe a bill’s chances of passage through the legislature (Ernsthausen, 2014). News organizations generally, however, have not had success fully automating the writing of news stories in genres such as governmental reporting that depend on subjective judgment and for which there are no neatly standardized data sets available as algorithmic inputs (Hall, 2018).

Diakopoulos (2019) makes the case that much of the potential for algorithmic journalism lies in hybridization, in which human reporters use machines to help with tasks such as prioritizing, classifying, associating and filtering information (p. 19). He notes that computers are a long way from being able to do the sort of complex tasks listed above and thus researchers might productively focus on areas where computers can augment, rather than replace, the work of human reporters. He offers the example of Swedish sports site Klackspark, which relies on AI to both write short game summaries and to alert human reporters to newsworthy events within them so that they can conduct additional reporting.

Other AI-Journalism tools have included FactWatcher (Hassan et al., 2014), which aims to help journalists identify facts that might serve as leads for news stories, The City Beat tool (this and the immediately following tools described by Trielli & Diakopoulos, 2019), which aimed to let journalists know about local events in New York City, and the Tracer system, which searched Twitter to detect potentially newsworthy events. Local News Engine employed algorithms to gather and sift through government data in the United Kingdom to look for leads. RADAR, which stands for Reporters and Data and Robots, offers thousands of stories per month that are run by UK–based media outlets that subscribe to its wire service. RADAR data journalists figure out angles for stories and then create data-driven templates with rules for how to localize the stories. Each journalist can produce 200 “local” stories per template they create.

Perhaps most similar to the tool discussed in this article, another AI instrument that has recently come to be used in news gathering is the Lead Locator (WashPostPR, 2020). Used by The Washington Post during its 2020 election coverage, the Lead Locator used machine learning to generate a tip sheet for reporters that would analyze voter data from state and county level and point them to potentially interesting anomalies and outliers in the data.

Journalistic News Values and AI

Diakopoulos and others have recently begun discussing the various types of projects described above as computational news discovery (CND). The work discussed in this article logically fits within this framework, which is defined as “the use of algorithms to orient editorial attention to potentially newsworthy events or information prior to publication” (Diakopoulos, Trielli & Lee, 2021). More broadly, this research falls within the field of Human-Computer Interaction (HCI), with particular attention to such works that emphasize the need to focus on human-centered technologies (Riedl, 2019), and works that emphasize the importance of designing systems that work within specific value frameworks (e.g., Friedman & Khan, 2006; Shilton, 2018).

Academics and computer scientists who seek to help journalists should understand the values that reporters bring to their work. If the tool is aimed at assisting with news discovery, there’s a clear need to understand concepts of news, newsworthiness and other values of the newsroom. Analysis of previous journalist–computer science partnerships has uncovered tension over news values (Diakopoulos, 2020).

News values are those things that help reporters decide what is news. In an update to their earlier work, Harcup and O’Neill (2016) found that information considered for potential publication in news stories generally included one or more of the following characteristics: exclusivity, bad news, conflict, surprise, arresting audio or video, shareability, entertainment, drama, relevance, discussion of the power elite, magnitude, celebrity, good news, or stories that fit a news organization’s agenda. In addition to norms about what news is, journalists share norms about how news should be gathered and about what principles should guide them in the process. Kovach and Rosenstiel (2021) distilled both of these concepts down to 10 “elements of journalism.” Among these ideas are that journalists have an obligation to the truth, that they should put the public interest above their own, that information they report must be verifiable, that journalists should be independent from the influences of their sources, and that journalism should monitor power.

McClure Haughey, Muralikumar, Wood and Starbird (2020) explored how journalists used technology to investigate and report on misinformation and disinformation. After analyzing in-depth interviews with 12 journalists, they offered suggestions on how academics might better support journalists. For example, they found that, while researchers were inclined to build advanced analytical tools to see trends in big data, the journalists said they wanted help tracking specific bad actors who were posting in chatrooms, on Discord servers and on social media. In other words, the reporters saw their work as more akin to ethnography than data science. Turning to values, the authors found that the journalists were interested in the journalistic value of verifying sources, which in the data world could be translated as establishing the credibility of the data used. Journalists, they found, wanted to know exactly how the data was collected, processed and analyzed.

Diakopoulos has multiple works that explore elements of news values within computational news discovery tools. Interviews with journalists who had used CND systems (2020) emphasized that CND tools should work with journalistic evaluations of newsworthiness and quality, and also be designed for flexibility and configurability. In an assessment of a tool designed to spot algorithms used in government decision-making, he and coauthors Trielli and Lee (2021) found journalists were not satisfied with crowdsourced (by non-journalists) judgments of newsworthiness. Milosavljevic and Vobic (2019) conducted a series of semi-structured interviews with journalists from leading British and German news organizations on the topic of “automation novelties” (p. 16) and found that, while views were in a state of flux, the process of coming to terms with change ultimately had reinforced rather than upended certain journalistic hegemonic belief systems, particularly around the ideals of objectivity, autonomy and timeliness. Most notably, they found a consensus that any automation must exist in a hybrid state that would continue to keep humans in the loop.

Posetti (2018) found that the news industry has a focus problem, pursuing innovations such as AR and AI for innovation’s sake, and that this has, among other things, risked burnout in the newsroom. Posetti quotes journalist-turned-academic Aaron Pilhofer as discussing journalists’ tendency to judge new innovations by using their “tummy compass: if it feels like the right thing to do, it must be” (2018, p. 18). One clear theme from the report was a need to make audience needs a main focus of innovation.

Furthermore, Lin and Lewis (2021) expand on this conceptualization of what journalistic AI should be. They suggest there ought to be a balance between journalists’ wants and audience needs, arguing that any journalistic AI tools should be designed with certain normative ideals in mind, namely those in service of accuracy, accessibility, diversity, relevance, and timeliness. Timeliness, for example, may be exemplified by a tool saving a reporter time in producing certain parts of a story but then seeing that time spent elsewhere on more deliberative aspects in service of the audience.

AI News Tip Sheet Project

Previous Work

The researchers of this article had earlier created Digital Democracy (Blakeslee et al., 2015), a free online resource that allowed users to search and examine transcripts of videos from the California legislature. While interesting studies were produced (Latner et al., 2017), that system was judged to be not impactful, as it required engaged and motivated citizens to proactively visit the website and use the tools.

So the team developed a system that produced AI-generated text summaries that could be offered to journalists. The idea was to use the same transcripts and other data from the Digital Democracy system to produce a short article. So-called algorithmic journalism has been in use in the industry for over a decade, but basically confined to generating sports, weather and certain financial news articles. This was among the first to be used for politics or government. A survey–based study (Klimashevskaia et al., 2021) of the AI-generated content showed the accuracy and usefulness of the summaries but raised concerns regarding the completeness of coverage. Early feedback also indicated journalists would rather be given the primary sources and the underlying information to form their own stories rather than modify or augment auto-generated ones. Attention turned to the news tip sheet concept.

System Design

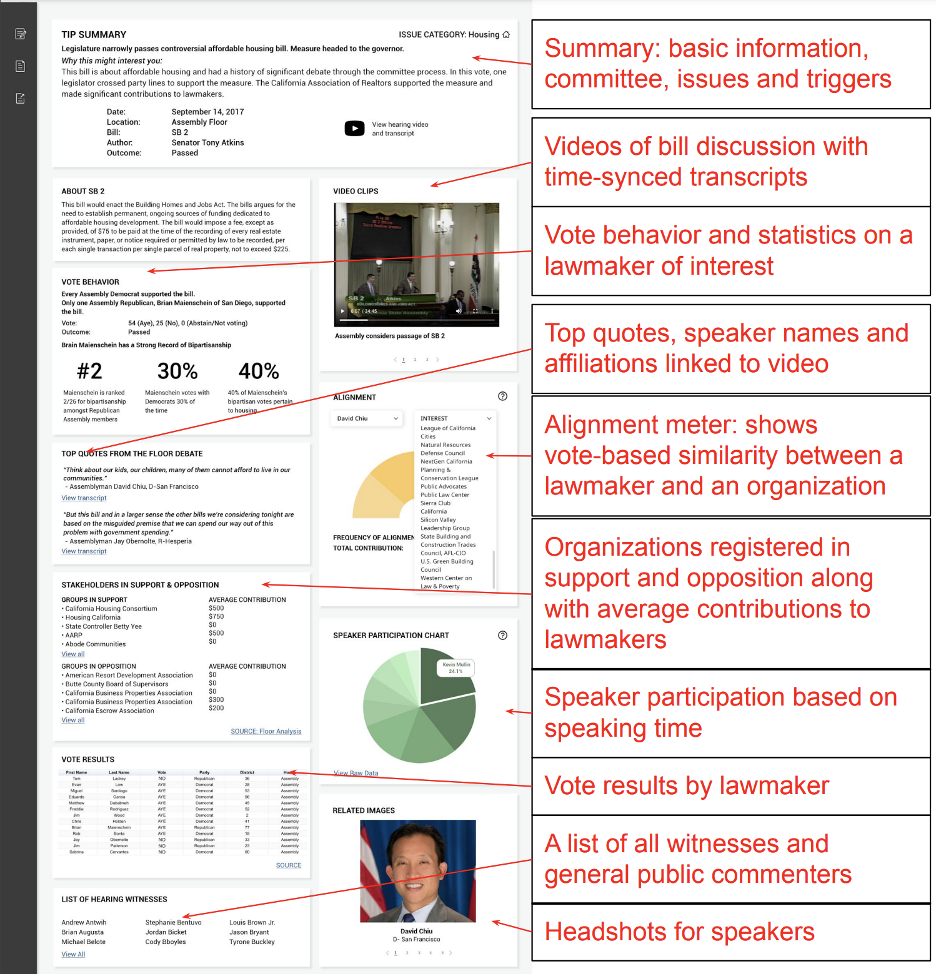

The AI4Reporters approach is an AI system that automatically generates stories and manages electronic “tip sheets” based on recent legislative events and data. Specifically, tip sheets contain vital information about a recent bill discussion (a portion of a committee hearing or floor session dedicated to a specific bill). The idea is to try to recreate notes taken by an informed reporter who was there in person when the bill discussion took place. Each tip sheet appears as a single interactive web page accessible from the reporter’s dashboard on the AI4Reporters portal. The page includes basic information such as committee composition, date, vote outcomes, links to bill texts, amendments, and a video recording with time-synced professional-grade transcripts. Top quotable utterances are mined from the transcript and displayed together with the identity and affiliation of the speaker for possible use by the reporter. Quotes are suggested based on basic criteria: they should be complete, grammatically correct statements, made without anaphora, that contain topic words. Each quote is linked directly to the section of the video from which it was transcribed to allow reporters to investigate the context. There is also background information on lawmakers, such as donation amounts, percentage party alignments, and a list of top donors along with associated average donation amounts.
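The quote-suggestion criteria can be illustrated with a rough sketch. This is not the AI4Reporters implementation, which the article does not detail; the pronoun list, the function names and the sentence checks below are all assumptions chosen only to show how "complete statement, no anaphora, contains topic words" might be approximated.

```python
import re

# Assumption: a small pronoun list stands in for real anaphora detection.
ANAPHORIC_WORDS = {"it", "this", "these", "those", "they", "them", "he", "she", "him", "her"}


def is_quotable(text, topic_words):
    """Return True if an utterance passes three crude checks."""
    text = text.strip()
    words = re.findall(r"[a-z']+", text.lower())
    # 1. Looks complete: starts with a capital letter, ends with terminal punctuation.
    looks_complete = bool(text) and text[0].isupper() and text[-1] in ".!?"
    # 2. Contains no word that points back to earlier context.
    no_anaphora = not any(w in ANAPHORIC_WORDS for w in words)
    # 3. Mentions at least one topic word.
    on_topic = any(w in topic_words for w in words)
    return looks_complete and no_anaphora and on_topic


def top_quotes(utterances, topic_words, limit=3):
    """utterances: list of (speaker, affiliation, text) tuples in transcript order."""
    return [u for u in utterances if is_quotable(u[2], topic_words)][:limit]


utterances = [
    ("Sen. A", "Senate", "Water storage is the central question for the valley."),
    ("Sen. B", "Senate", "They never told us about it."),
    ("Witness C", "Farm Bureau", "and so the water"),
    ("Sen. D", "Senate", "The budget hearing starts at noon."),
]
quotes = top_quotes(utterances, {"water"})
```

Only the first utterance survives here: the second relies on pronouns, the third is a fragment, and the fourth is off topic. A production system would presumably use a parser rather than these surface checks.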

Data visualizations are generally rendered in the right column. These are charts and graphs derived from, for example, speaker participation in terms of number of words or duration of time. The “alignment meter” visualization tool lets the user display an alignment score between any of the lawmakers involved in the bill discussion and any of the organizations that our system tracks. The score is based on a comparison of votes with the officially submitted written positions of the organization, which in California are public records available from the legislative analyst’s report on the bill. The tip sheet summary box near the top of the page includes a section on “why you may be interested” in this discussion. Here, the system surfaces results of statistically anomalous triggers that the AI4Reporters system automatically looks for in every bill discussion. A sentence is produced describing the trigger, if it is found. Examples of triggers include a close vote, a member who breaks ranks with their own party on a vote, a nontrivial “back and forth” exchange indicative of an argument between a lawmaker and another person, and an unusually high number of witness testimonies. Many more triggers are possible, and the system can be constantly updated to include new and interesting observation patterns that can be checked. Triggers, however, are not required, as the system generates a tip sheet for every bill discussion regardless of any unusual events that may have occurred. Once tip sheets are generated, the system allows users to select them based on the subject, committee, lawmaker, keywords or geographical location that may have come up in the discussion.
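As an illustration only, the alignment score and two of the triggers could be sketched as follows. The article does not give the formulas, so everything here is an assumption: the score is taken to be the share of bills on which a vote matched the organization's stated position, a "close vote" is one decided by a margin of at most one, and "breaking ranks" means voting against the majority of one's own party.

```python
def alignment_score(lawmaker_votes, org_positions):
    """Share of shared bills on which the vote matched the organization's position.

    lawmaker_votes: dict of bill_id -> "aye" or "no"
    org_positions:  dict of bill_id -> "support" or "oppose"
    Returns a float in [0, 1], or None when no bills overlap.
    """
    agrees = {("aye", "support"), ("no", "oppose")}
    shared = [b for b in lawmaker_votes if b in org_positions]
    if not shared:
        return None
    matches = sum((lawmaker_votes[b], org_positions[b]) in agrees for b in shared)
    return matches / len(shared)


def find_triggers(votes, close_margin=1):
    """votes: list of (member, party, vote) tuples for one bill discussion."""
    sentences = []
    ayes = sum(1 for _, _, v in votes if v == "aye")
    noes = sum(1 for _, _, v in votes if v == "no")
    if abs(ayes - noes) <= close_margin:
        sentences.append(f"The vote was close: {ayes} ayes to {noes} noes.")
    for party in sorted({p for _, p, _ in votes}):
        party_votes = [(m, v) for m, p, v in votes if p == party]
        party_ayes = sum(1 for _, v in party_votes if v == "aye")
        party_noes = sum(1 for _, v in party_votes if v == "no")
        if party_ayes == party_noes:
            continue  # no clear party line to break from
        party_line = "aye" if party_ayes > party_noes else "no"
        for member, v in party_votes:
            if v in ("aye", "no") and v != party_line:
                sentences.append(f"{member} ({party}) broke ranks with their party.")
    return sentences


votes = [("Lee", "D", "aye"), ("Kim", "D", "aye"), ("Park", "D", "no"),
         ("Diaz", "R", "no"), ("Ng", "R", "no")]
triggers = find_triggers(votes)
score = alignment_score({"AB1": "aye", "AB2": "no", "AB3": "aye"},
                        {"AB1": "support", "AB2": "support"})
```

Each trigger yields one plain sentence, mirroring the description of the "why you may be interested" section. Statistical anomaly detection, as the article describes it, would likely compare against historical baselines rather than fixed thresholds like these.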

Figure 1 A Screenshot of an AI4Reporters Tip Sheet along with a Description of each Element.

This system has been designed, and a prototype produced, based on older data from the Digital Democracy database (2014-2018), containing legislative information from California (four years) as well as Florida, Texas and New York (two years each). The goal is to resume live data gathering and launch the production version of the tool once we show viability of and demand for the system.

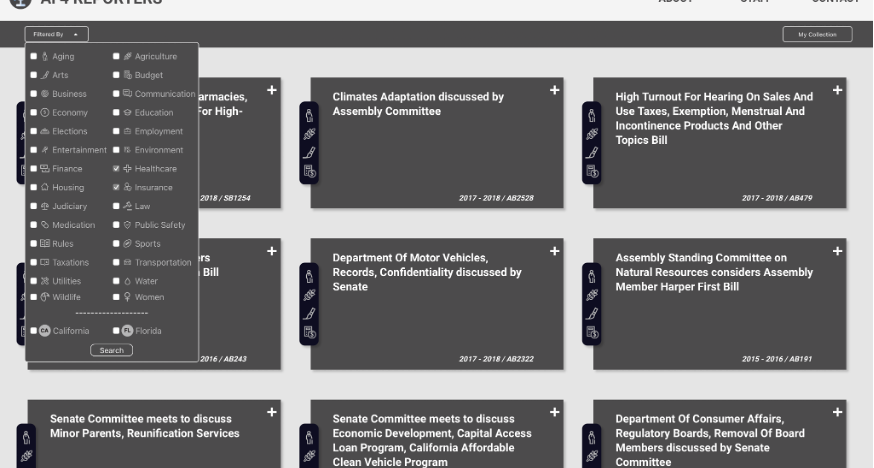

Workflow

The general workflow of the system is as follows: First, a reporter logs on to the system by navigating to the website using a standard browser. Once logged in, the reporter is shown a dashboard with various “cards,” such as the ones shown in Figure 2, on display. Every card has something resembling a headline describing a particular tip sheet. The cards also display other metadata such as dates, state and bill information. The dashboard has filtering based on topic and also keyword searching capability from its main navigation bar. Applying filters or searching for keywords will constrain the cards that appear on the dashboard. Every card can be clicked and upon clicking will take the user to a full tip sheet (see previous Figure 1).

Customization

The system allows for customization so that only tip sheets of interest are shown to a user. From a settings screen accessible from the main dashboard, the user can select basic information such as state, county, issues (keywords) and lawmakers. After setting these options, the system automatically defaults to showing only cards that satisfy the reporter’s constraints. For example, a California reporter may be interested in water and their hometown assembly member. By setting the state (California) and topic (water), as well as county, the system automatically shows the reporter California tip sheets either about water or ones that describe some activity from the county’s assembly members or senator.
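The filtering logic in the California example reads as "state must match, and then either the topic or a local lawmaker must match." A minimal sketch of that rule follows; the `Card` and `Preferences` classes and their field names are hypothetical and are not taken from the AI4Reporters code.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    headline: str
    state: str
    topics: set = field(default_factory=set)
    lawmakers: set = field(default_factory=set)


@dataclass
class Preferences:
    state: str
    topics: set = field(default_factory=set)
    # Assumption: the chosen county has already been resolved to its legislators.
    local_lawmakers: set = field(default_factory=set)


def matches(card, prefs):
    """State is required; after that, a topic OR a local lawmaker is enough."""
    if card.state != prefs.state:
        return False
    return bool(card.topics & prefs.topics) or bool(card.lawmakers & prefs.local_lawmakers)


def visible_cards(cards, prefs):
    return [c for c in cards if matches(c, prefs)]


cards = [
    Card("Delta tunnel hearing", "CA", {"water"}, {"Sen. X"}),
    Card("Housing bill amended", "CA", {"housing"}, {"Asm. Local"}),
    Card("Transit funding vote", "CA", {"transportation"}, {"Sen. Y"}),
    Card("Everglades restoration", "FL", {"water"}, {"Sen. Z"}),
]
prefs = Preferences("CA", {"water"}, {"Asm. Local"})
shown = [c.headline for c in visible_cards(cards, prefs)]
```

Under these assumptions the reporter sees the water hearing and the housing bill involving the local assembly member, but not the unrelated California card or the Florida water card.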

Figure 2 Topic-based Filters Available in the Tip Sheet

This study examines perceptions of utility regarding the project’s AI-generated legislative news tip sheets. It does so through two research endeavors. The first was a survey of 193 journalists and the second was a series of hour-long, semi-structured interviews with working journalists. The overarching goal was to better understand how the designed system meets the needs and expectations of news organizations.

Method

Survey Instrument

The team created a master list of targets for the survey. The largest source was a commercial tool called Cision that has a large database of U.S. media professionals. Additional lists were compiled from attendees of a Knight Foundation–funded conference (Newslab, 2020) and from a list of Florida-based journalism field contacts made available from one of the authors’ universities. After pruning duplicates, invalid addresses and opt-out requests, the final list was 4,516 addresses, 97% of which came from the Cision list; 4,296 were considered successfully delivered. Only fully completed surveys are included in this analysis; there were 193, representing 4.5% of the successfully delivered addresses. Participants received no compensation for completing the survey.
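The response rate depends on which denominator is used. The short calculation below, using only the counts reported above, shows both; the variable names are ours.

```python
final_list = 4516   # addresses after pruning
delivered = 4296    # addresses considered successfully delivered
completed = 193     # fully completed surveys

rate_of_list = completed / final_list
rate_of_delivered = completed / delivered

print(f"{rate_of_list:.1%} of the final list")
print(f"{rate_of_delivered:.1%} of delivered addresses")
```

The 4.5% figure matches the delivered-address denominator; measured against the full list, the rate is about 4.3%.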

The primary aims of this questionnaire were to identify current trends in statehouse coverage, barriers to coverage, and priorities for state-level news reporting. The survey also aimed to determine which features in the completed system were of the highest interest.

Semi-Structured Interviews

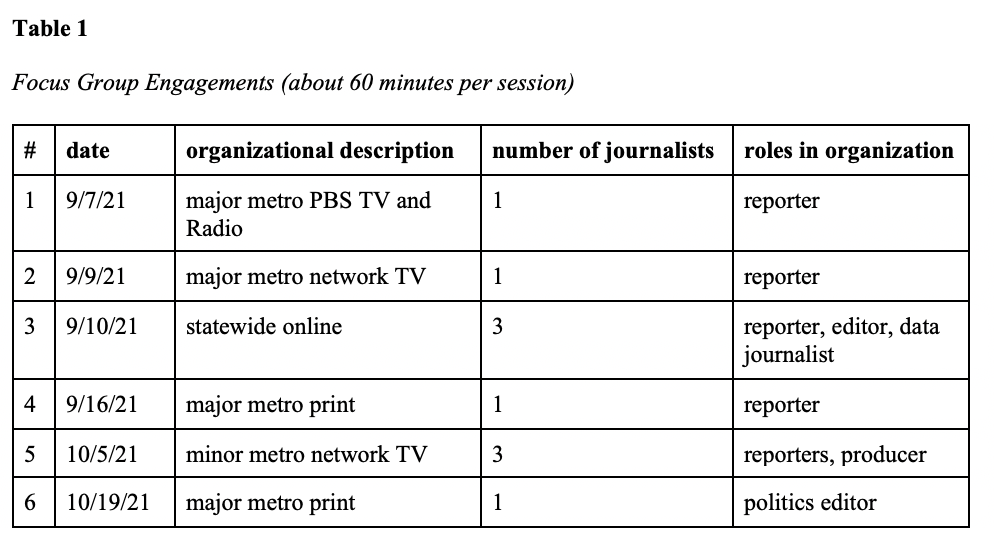

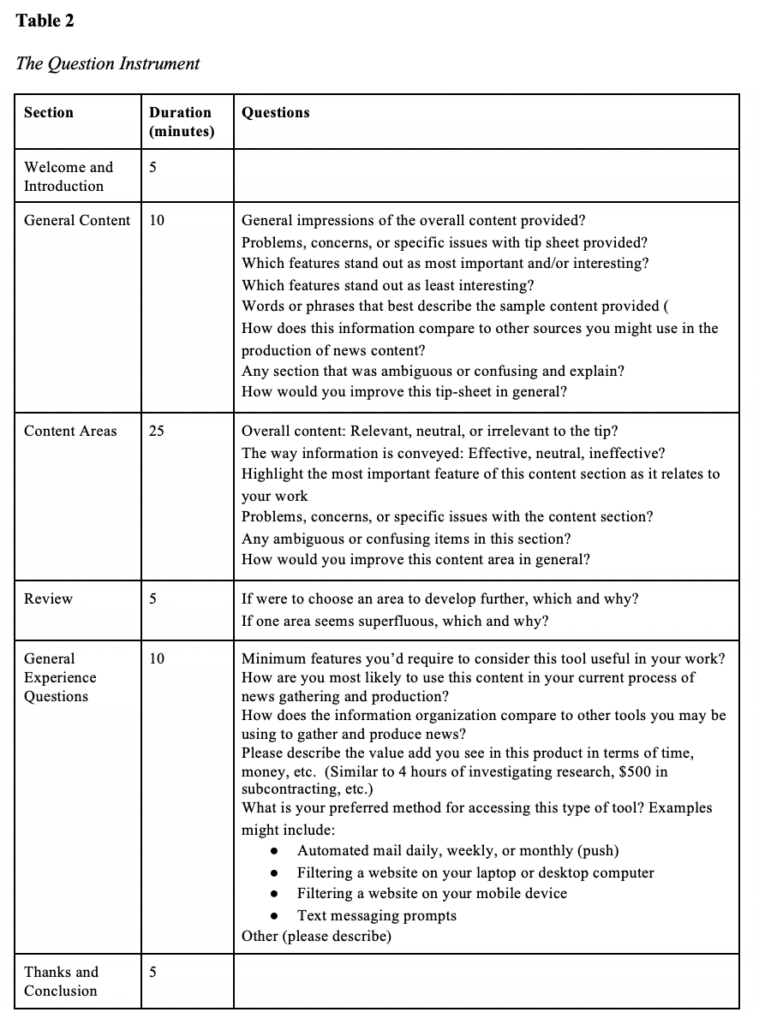

More nuanced perceptions of utility were gathered from an analysis of semi–structured in–depth interviews with reporters with varying experience covering the state legislature in California. While time–consuming, labor intensive and often reliant on small sample sizes, the use of semi-structured interviews is powerful in that it allows for open-ended responses that can help guide formative development of a project or highlight unforeseen issues (Adams, 2015). As the demo material for the automated tool was specific to California’s legislature, the team chose to only engage journalists familiar with California politics.

Six engagements involving 10 journalists were held in September and October 2021. Nine had already participated in the survey and indicated interest in further discussions about the tool. In addition to familiarity with California government, interviewees were selected to represent a cross–section of platforms (print/digital, television and radio), outlet size and audience reach (smaller and larger outlets in minor and major metropolitan areas), and position in their newsroom (junior– and senior–level). Each engagement was about 60 minutes in length and was conducted over video conference sessions. Typically, three individuals affiliated with the present study participated: one served as main presenter, one as official note taker, and the lead information architect was there to take notes and answer questions. The presentation was made entirely by one interlocutor while the others mainly took notes and answered specific questions. The participants were seven reporters, two editors and one television producer. The group was invited via email. Each journalist was offered a $100 incentive for the interview. However, only one person returned the form necessary for the payment to be sent, and the team believes the incentive was a non-factor.

The interviewees came from backgrounds including a major–market television station, a local television station, a public radio station, a metro newspaper and a non–profit news agency based at the state Capitol. All were shown a mock–up of the dashboard design and given examples of the results the model could generate. For the interviews, our primary objectives were as follows:

- Generally, would the reporters find the tool as modeled useful to their jobs?

- What abilities and features would be most valuable to them in a tip sheet tool?

- How would they like data conveyed, presented and delivered?

- How specifically would they envision using the tool in their daily reporting?

Table 1 lists the six interview sessions held, while Table 2 provides an outline of each interview with predefined questions. Within each session, the conversation was not strictly controlled, and interviewees were free to focus on a single area or take the discussion in different directions. For analysis, all interviews were transcribed, and the authors analyzed the data for themes, followed by a coding process initially guided by the objectives listed above. This analysis also involved an adjective frequency analysis of the transcripts of all the sessions to better understand the overall sentiments expressed. This produced a list of the adjectives used most frequently across all discussions, derived using a Python script with NLTK library part-of-speech tagging. An additional round of thematic analysis and coding, conducted to address the third research question, focused on how the journalists conceptualize the role of journalistic AI in their newswork.
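The original script is not reproduced in this article, so the following is only a sketch of what an adjective frequency analysis with NLTK part-of-speech tagging might look like. The function names are ours. The counting step works on any list of (word, tag) pairs that use Penn Treebank tags; the tagging helper relies on `nltk.word_tokenize` and `nltk.pos_tag`, which require NLTK's tokenizer and tagger data to be downloaded first (the resource names vary by NLTK version).

```python
from collections import Counter

# Penn Treebank tags for adjectives: base, comparative and superlative.
ADJECTIVE_TAGS = {"JJ", "JJR", "JJS"}


def adjective_frequencies(tagged_tokens, top_n=10):
    """Count adjectives (case-insensitively) in a list of (word, tag) pairs."""
    counts = Counter(
        word.lower() for word, tag in tagged_tokens if tag in ADJECTIVE_TAGS
    )
    return counts.most_common(top_n)


def tag_transcript(text):
    """Tokenize and tag raw transcript text with NLTK (data download required)."""
    import nltk  # imported lazily so the counting step has no dependencies
    return nltk.pos_tag(nltk.word_tokenize(text))


# Hand-tagged tokens stand in for real transcript output here.
sample = [
    ("This", "DT"), ("is", "VBZ"), ("useful", "JJ"), ("and", "CC"),
    ("Useful", "JJ"), ("tools", "NNS"), ("are", "VBP"), ("better", "JJR"),
]
frequencies = adjective_frequencies(sample)
```

With real data, the call would be `adjective_frequencies(tag_transcript(transcript_text))`, run once over the combined transcripts of all sessions.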

Results

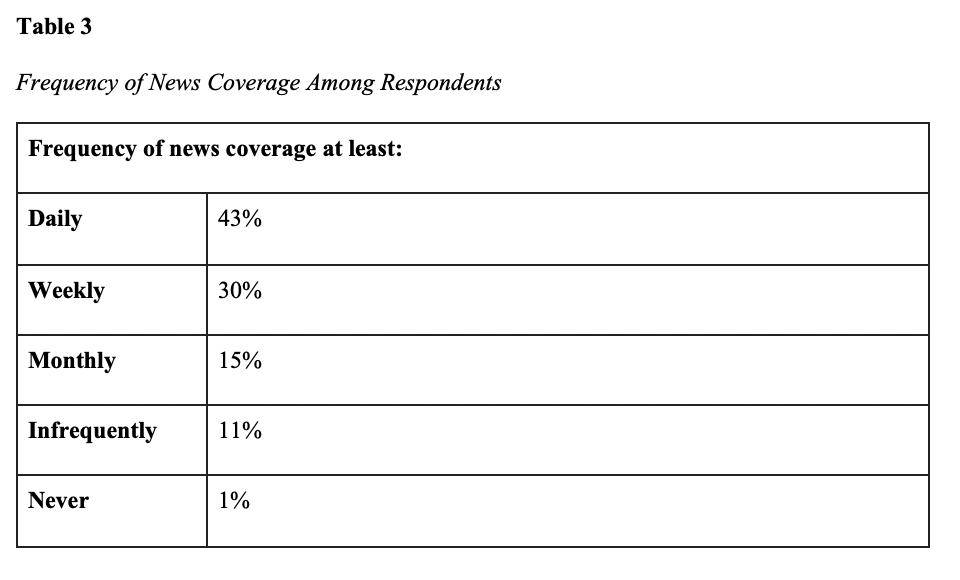

One hundred ninety-three news professionals completed the survey instrument. Respondents were asked to communicate from the perspective of their current employment roles and responsibilities. They self-identified primarily as editors (45%) and reporters (36%), and worked primarily for print or web-based news outlets (80%), with most of the rest in radio (11%) and television (8%). The largest share, 46% of the respondents, worked in media outlets of fewer than five employees, while large news organizations of 51-99 employees (3%) and 100 or more employees (3%) were the least represented. Some 73% of the respondents indicated they cover statehouse news at least weekly. Only 1% never cover statehouse news. Table 3 highlights the pattern of statehouse coverage noted by all respondents.

The most common primary audience for respondents’ news outlets was local, at 50%. The next wider audience, regional, was served by 24% of the respondents. Statewide-focused news organizations represented 15% of the responses, and 7% of all responses came from nationally focused news sources.

Eleven states are represented in the survey: Arizona, California, Colorado, Florida, Georgia, Illinois, New York, North Carolina, Oklahoma, Pennsylvania, and Texas. The number of respondents was greatest from California (59), Florida (34) and Texas (22). This is likely a product of the researchers’ networks, which are based in California and Florida. It should not be interpreted as regional interest in artificial intelligence or allied solutions to improve statehouse coverage.

Respondents were asked to identify the importance of statehouse coverage as critical, important, or not at all important. Unsurprisingly, 98.8% of respondents indicated that it is “important to cover policies and politics unfolding in state legislatures.” Within that, 80% identified such coverage as “critical,” while 1.2% identified it as not at all important. The survey provided these responses as mutually exclusive, so respondents could choose only one.

From their responses, a minority (37%) of news organizations “feel well-resourced to cover the issues and events connected to a state legislature.” The barriers to statehouse coverage abound. Respondents were asked to rank order the most common barriers to covering state news. When given a list of likely barriers to statehouse coverage, the most common response, “insufficient resources to research & report on newsworthy events, even if I am aware of a newsworthy event” was shared by 38% of the studied group. The next most common barrier, shared by 28% of the respondents, was “geographic distance—lack of access to proceedings.” The third most common barrier, “insufficient resources to track and identify newsworthy events,” challenged 19% of these news professionals.

The list of other challenges included: statehouse policies and politics are not of interest to my audience (6%), available wire service articles are not relevant to my audience (3%), and there are no limiting factors (<1%).

Some respondents offered other challenges as free-form responses or as added commentary to their sorted list. Of those who identified other barriers, the most common response was that all listed barriers apply or confound their challenges. Some pointed to missing resources, such as “the wire service is huge too, we used to subscribe to CTNS but that went away” (respondent 83), while others addressed systemic issues such as staffing shortages or “state administration’s contempt for journalism, open government, and transparency” (respondent 154). General sentiments of note for this research emphasized the limitations of reduced staffing or having a single reporter in a statehouse.

Critical observations offered as barriers also included focus: One noted “we are a national publication and do not routinely focus on covering state legislatures” (respondent 191) and another offered “we are a very local paper and do not report on state or national issues” (respondent 166). These responses, while rare, hint at audiences that might see less benefit for the tip sheet. Others note that news discovery would shape their interest in statehouse reporting (e.g., identifying national trends reflected in a state legislature or hyper–local news shaped by the state legislature).

Given a Likert scale between very likely and very unlikely, 81% of the participants indicated they would increase state legislature coverage if these barriers were removed. Some 36% were very likely to increase coverage, 45% were likely, 1% were unsure, 5% were unlikely and 1% were very unlikely.
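The 81% figure is the sum of the two favorable Likert categories, sometimes called a top-two-box score. As a small worked check using only the percentages printed above (the variable and function names are illustrative and not from the study):

```python
# Percentages as reported in the text for the "increase coverage" question.
reported = {
    "very likely": 36,
    "likely": 45,
    "unsure": 1,
    "unlikely": 5,
    "very unlikely": 1,
}


def top_two_box(shares, favorable=("very likely", "likely")):
    """Sum the shares of the two most favorable Likert categories."""
    return sum(shares[label] for label in favorable)


favorable_share = top_two_box(reported)  # 36 + 45 = 81
listed_total = sum(reported.values())    # 88; the text does not break out the rest
print(favorable_share, listed_total)
```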

When prompted to choose, from a list of six, the state legislature topics they were most likely to cover, 92% of respondents indicated likelihood of covering “topics of local/regional importance” (e.g., “Proposed law could mean more water for Central Valley farmers”). Likewise, 82% were likely or very likely to cover “topics related to a specific policy area” (e.g., “State proposes changes to K12 curriculum standards”). And 79% were likely or very likely to cover “topics of potential statewide importance” (e.g., “Florida budget vote fails”). Other options, such as “Topics of potential national importance” (e.g., “CA votes to become a sanctuary state”) and “Topics related to the people who participate in the legislative process” (e.g., “Local dealership owner testifies in Sacramento”) retained majority interest, at 68% and 54% respectively. The topic drawing the least interest was “Topics related to party politics” (e.g., “Republicans break ranks to support tax measure”) at 41%.

To help answer RQ1 and RQ2, the following section explains the specific features that respondents stated would best support newsrooms.

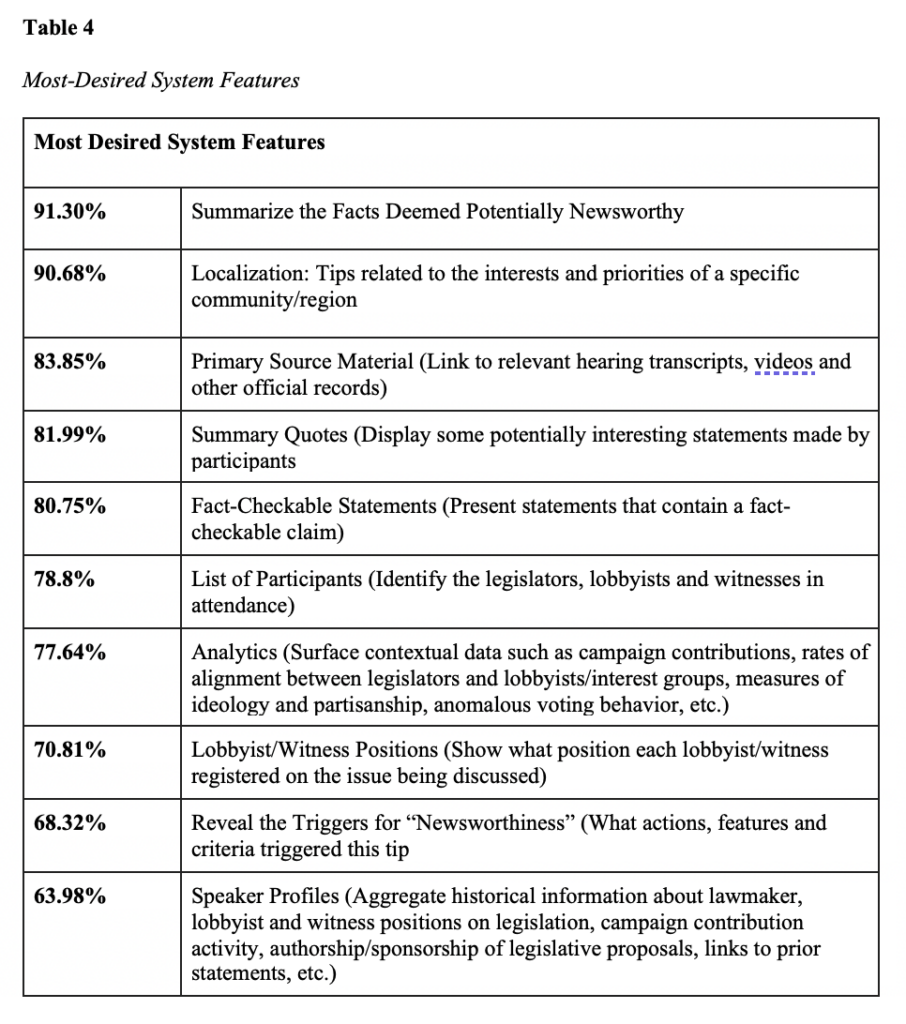

This content, derived from existing or planned features, was evaluated on a Likert scale from very valuable to not valuable with a “neutral” option at the midpoint of the 5-point scale. The most desired content items are shown in Table 4, sorted by percentage responses at or above valuable:

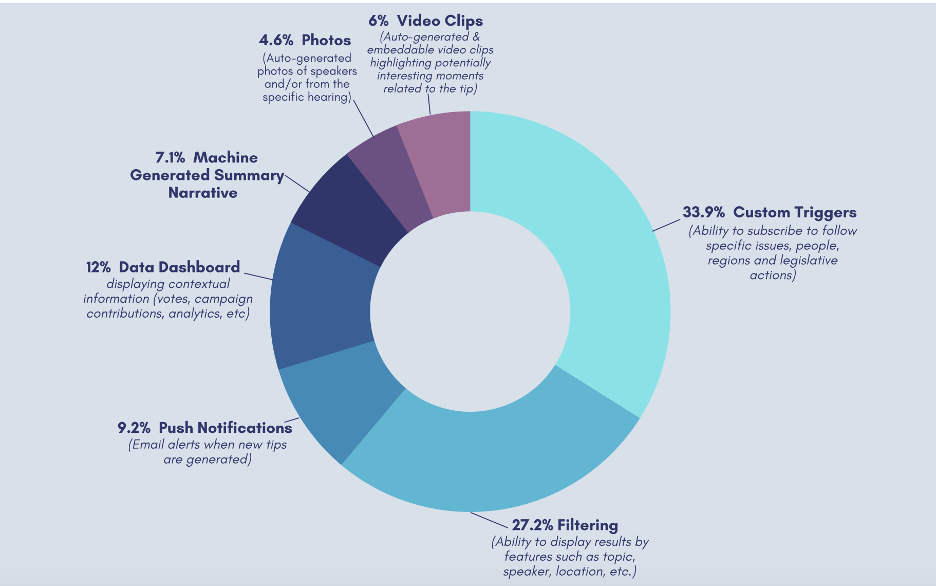

The respondents were also asked to identify specific features implemented as part of the user experience. Such items include automatically–generated video clips, receiving email notifications when new tips are generated, filtering content by user generated criteria, etc. These features are generally common to such systems.

For user experience items, preferences leaned primarily toward content discovery and search. The most valued feature, based on a 5-point Likert scale of very valuable to not-at-all valuable, was customized triggers that allow users to subscribe and follow specific issues, people, regions and legislative actions. The second most valued feature was filtering based on attributes like speaker, location or topic. Notably, multimedia aggregation such as photos and videos ranked relatively low in preference, at 6% and 4.6% respectively.
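One way to picture the two top-ranked features is as a subscription that matches incoming tips on a few attributes. The sketch below is purely illustrative and does not describe how AI4Reporters is implemented: the `Tip` and `Subscription` classes, their field names, and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class Tip:
    """A hypothetical tip sheet entry with filterable attributes."""
    headline: str
    speaker: str
    location: str
    topic: str
    action: str


@dataclass
class Subscription:
    """A user's trigger; an empty set places no constraint on that attribute."""
    speakers: Set[str] = field(default_factory=set)
    locations: Set[str] = field(default_factory=set)
    topics: Set[str] = field(default_factory=set)
    actions: Set[str] = field(default_factory=set)

    def matches(self, tip: Tip) -> bool:
        checks = [
            (self.speakers, tip.speaker),
            (self.locations, tip.location),
            (self.topics, tip.topic),
            (self.actions, tip.action),
        ]
        # Every non-empty criterion must contain the tip's value.
        return all(not wanted or value in wanted for wanted, value in checks)


def filter_tips(tips: List[Tip], subscription: Subscription) -> List[Tip]:
    """Keep only the tips that satisfy the subscription."""
    return [tip for tip in tips if subscription.matches(tip)]


# Hypothetical sample data for illustration only.
tips = [
    Tip("Proposed law could mean more water for Central Valley farmers",
        "Legislator A", "Central Valley", "water", "committee vote"),
    Tip("State proposes changes to K12 curriculum standards",
        "Legislator B", "statewide", "education", "bill introduced"),
]
water_watch = Subscription(topics={"water"}, locations={"Central Valley"})
matched = filter_tips(tips, water_watch)
```

Treating an empty set as "no constraint" lets the same structure serve both as a narrow alert (several attributes set) and as a broad filter (one attribute set), which mirrors the customization respondents said they valued.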

Figure 3 illustrates the complete survey results for user experience features:

Figure 3 Desired User Features (Ranked ‘Valuable’ and ‘Very Valuable’)

Finally, 91% of the respondents indicated that they were likely or very likely to use a system that offered these features and functions as listed. Interest is also emphasized by the 122 respondents who requested early access to the beta version of the tool.

Interview Findings

As part of RQ1 and RQ2 findings, perceptions of usefulness of the tip sheet were positive in every case. Most reporters expressed excitement and surprise that such a tool could be a reality. Some described the inefficient and largely manual process they use to track legislative activities. Some aspects of the tip sheet were appreciated more by some reporters depending on their medium.

As one reporter said:

“This would be an efficient way to do better to serve our communities like we claim we want to do with more than just click bait. These are stories that change lives, and if we’re not reporting them, who will?”

To the question of how they would like to receive alerts, most expressed dissatisfaction with email-based communication and preferred a web-based portal, dashboard or app with in-software alerting capability (such as text messages) as their method of interaction.

Regarding exactly how they would use the tool, answers again varied based on the reporters’ legislative knowledge. Two Capitol-based journalists, for example, were enthusiastic about a tool highlighting relationships between lawmakers and lobbyist donations, while one general assignment reporter said she would be unlikely to use that information in daily news reports.

Concerns centered around areas of transparency, trust, and timeliness. For example, several reporters wanted to understand how the algorithm came to use the word “controversial” in a headline describing one bill’s passage. Several also voiced concerns about how to attribute the source of the information, the tip sheet, in their reports. Another concern centered around timeliness, in that any delay in receiving alerts could decrease the information’s newsworthiness.

One senior editor expressed some concern about non-neutral language in the descriptions, pointing out that younger, less experienced reporters are likely to take these auto-generated descriptions and use them without verification. The same editor also said simple statistics such as a “lawmaker participation score,” measured partly by the length of a legislator’s speech, are not terribly useful because many subjective qualities go into such judgments.

As was indicated in the survey responses, journalists expressed interest in robust customization options. A television reporter, for example, said they would like to be able to get tips about upcoming actions for planning purposes.

Asked “How would this help you,” one San Francisco–based newspaper reporter answered: “Both [in terms of] depth and breadth. For investigative pieces, it would help connect the dots. [It] also makes it easier to [do] more.”

The authors also analyzed the six interview sessions using adjective frequency analysis. These efforts support the findings from the qualitative analysis, with the words “helpful,” “interesting,” “useful,” “local,” “great,” and “good” appearing among the most frequently used adjectives in the interviews. In terms of concerns raised, one of the tip sheet examples used the word “controversial” as a description of a vote, and several journalists said they found that description difficult to justify using algorithms. The general feedback was that we should try to stay value neutral on descriptions.

Turning to the third research question of how journalists conceptualize the tool, we found a wide variety of normative assumptions implicit in the interviews. One reporter, for a Los Angeles–based television station, expected any such tool to conform to prescribed methods of coverage she already employed. Shown an example artifact and asked her general impressions, she immediately focused on the precise ways that she might make use of it by, for example, using a headshot of a lawmaker and a quote from the summary, in a short television report. She noted that her station would not make use of legislative video unless it were unusually exciting. She described a sample one–page summary of a proceeding as “a firehose of information,” and said that a feature that would offer an alignment meter—a visualization of synchronicity between an interest group’s interests and a lawmaker’s past votes—would not be of use to her: “I only have 90 seconds; it won’t make it in.” Broadly, she expressed that what the tool should offer is the ability to do more work of exactly the type she already does, faster and easier. She did not conceptualize the tool as something that might expand her vision and serve her audience differently or better.

Reporters and an editor for a smaller-market station, however, were more interested in using the tool to expand both the nature and the content of their coverage. Two journalists appreciated being able to discover new stories for themselves. They conveyed that currently they are alerted to potentially newsworthy events in the legislature through press releases from the legislators themselves. A producer said that, while some summary information in the tool tip would not likely make it into a broadcast piece, it would be useful in letting them offer audiences more depth in a digital story. Continuing down the spectrum of how the tool might be employed, an editor and reporter with a legislative-based newsroom focused almost entirely on how the tool might be best used to unveil more complex patterns and relationships between money and power. They suggested new approaches and news products that could be offered based on the tool. Overall, they evidenced a view that the tool should be used to offer audiences greater clarity on how the legislature operates.

Discussion

AI4Reporters is an AI system that automatically generates news “tip sheets” in response to triggers that are based on analyzing legislative transcripts and other sources for bill information and campaign donations. The authors tested perceptions of the demand for, and utility of, the system via a survey of 193 journalists and semi-structured interviews with 10. The response was positive. Nearly all said coverage of legislatures is important, but only 37% said they feel like they have the resources to do such coverage. More than 80% said they would increase their coverage if barriers such as geographic distance, lack of ability to track newsworthy events, and insufficient access to relevant research were removed. In the interviews, journalists were pleased by the tool and expressed enthusiasm for using it, but voiced concerns in the areas of trust, transparency, and timeliness.

It is obvious from the survey responses that there is a fundamental level of interest in the sort of assistance that AI tools could offer journalists with respect to their legislative coverage. It is also evident that survey participants want custom solutions. The responses underscore the interest in user experience and user interface elements that support personalization and customization. They also hint at the challenge of designing a one-size-fits-all solution.

This challenge to create bespoke software is also highlighted in the unique needs of a relatively small subset of users. As evidenced in the types of news organizations surveyed, the many small organizations focusing on regional and local news, which face staffing limitations, are particularly interested in solutions that help increase their capacities. The responses show little reticence about using the tool and instead hint at ambitions to expand the news reporting capacities of these organizations. The interviews also supported this finding. While one might have assumed that larger outlets would be more interested in expanding coverage, this analysis finds equal or greater appetite for doing more public service-oriented journalism in smaller organizations.

Interestingly, the patterns seem to indicate a primary interest in news discovery, primary source parsing, and work that in concept expands the observational capacities of these news organizations. In other words, they seem to be affirming that they would increase reporting activity more if they were supported by tools that increase that capacity.

Since the specific focus of these organizations varies not only with their news audience, but with the topical focus of their publications and broadcasts, it is unsurprising that each wants a unique lens to specific elements of statehouse proceedings. While the survey is limited, it hints at the diverse needs of these organizations and their employees.

In terms of content, demand is clearest for summaries of relevant information that is localized and directly linked to primary source material. In short, journalists continue to want to do the work of journalism, maintaining the rigor of verified, independent and accountable work. They see the value of such systems as a means of supporting the traditional work of journalism.

Turning to evident normative assumptions, the authors find a variety evident in the interviews: Some reporters conceptualized the tool as one that ought to merely help them do their current work faster, while others jumped immediately to ways the tool could expand their coverage ambitions as well as their news product offerings to serve audiences better. This trend seemed to be independent of outlet size or medium. Future research might productively explore such connections. The authors have continued to iterate the tool’s options and offerings based on the feedback from the survey and interview results. For example, the tip sheet will not characterize votes as “controversial.”

Notably, although software systems are uniquely proficient at creating analytics or determining wide scale patterns, the appetite for such services is less strong. It is not clear if this is due to journalistic standards or the result of discomfort, distrust or other reticence. It is reasonable that in the age of algorithms, news professionals would rather do the pattern identification and calculations themselves. While this is a wide generalization for a limited study, it is worth noting this preference as the community of AI designers and developers seeks to support journalism. From this survey, it might be assumed that the ask for such an AI system may simply start with an old refrain: just the facts, for now.

Limitations of Study Design

This research had some limitations. As is the case with any such study, it would be more beneficial to survey a wider audience or to interview more reporters. As to the applicability of the findings, respondents gave their impressions to prompts about what to them was an entirely hypothetical tool. The journalists who were interviewed saw some results generated from a working prototype, but they did not personally interact with the tool themselves. Thus, the authors were not able to collect data that reflects how people would actually use the tool in a real-world application.

Any conclusions regarding the impact of being able to personalize or customize tip sheet results should be considered subject to this same limitation: Respondents in these studies did not actually personalize the data themselves and get results. One obvious direction for further research would be gathering feedback from journalists after they test a working model of the tip sheet for themselves.

Conclusions

The potential for algorithmic journalism was envisioned for weather stories as far back as 1970 (Glahn), and in its ideal form carries obvious benefits in terms of speed, scale, accuracy, lack of subjectivity and capacity for personalization (Graefe, 2016). Given all of this, Ramo (2021) wonders why we aren’t encountering more explicit examples of algorithmically–generated news in our daily lives and concludes it’s because trust issues remain. In a 2020 meta-analysis of 11 peer-reviewed journals, Graefe and Bohlken found that people deemed reports that they thought were generated by humans (whether in fact they were created by humans or algorithms) as more readable, of higher quality, and of similar credibility.

Diakopoulos (2019) argues for the use of hybridized systems that rely on algorithms to do work they do well but to then turn those results over to humans to use their subjective judgment and expertise to craft final stories. AI4Reporters seems to fit this model. Use of customizable tip sheets could lead to more coverage of state legislative proceedings and votes, and that increased coverage could lead to more citizen interest and involvement in legislative actions. For now, the human journalists are needed regardless, but even if or when they are not needed, they may still be preferred by news consumers. The authors are designing a tool for journalists, but the main goal is to serve the public with better access to legislative news that impacts their lives. Time is the most limiting factor in a newsroom. A tool that allows journalists to save time in one way, by more quickly producing a story they would have already done, is not expanding public service journalism if that time is not then spent on doing additional or more nuanced public service coverage. There is reason for optimism in this study: Many, though not all, of the journalists surveyed evidenced views that our journalistic AI tool would help them do more and better coverage.

Concerns around algorithmically–generated news center on its potential to cost human jobs, on credibility concerns, on the fairness and accuracy of reports, and on accountability. Projects such as the one tested, that help humans do the job of journalism in a critically needed subject area faster and better but keep reporters and editors as final crafters and decision–makers, have promise in serving both journalism and democracy.

References

Adams, W. C. (2015). Conducting semi-structured interviews. Handbook of practical program evaluation, 4, 492–505.

Blakeslee, S., Dekhtyar, A., Khosmood, F., Kurfess, F., Kuboi, T., Poschman, H., & Durst, S. (2015). Digital Democracy Project: Making government more transparent one video at a time. Digital Humanities 2015 Poster and Demo presentations.

Blankespoor, E., deHaan, E., & Zhu, C. (2018). Capital market effects of media synthesis and dissemination: Evidence from robo-journalism. Review of Accounting Studies, 23(1), 1–36.

Cohen, S., Hamilton, J. T., & Turner, F. (2011). Computational journalism. Communications of the ACM, 54(10), 66–71.

Cournoyer, C. (2015, March 1). Bright spots in the sad state of statehouse reporting. Governing. https://www.governing.com/archive/gov-statehouse-reporting-analysis-lou.html

Diakopoulos, N. (2019). Automating the news: How algorithms are rewriting the media. Harvard University Press.

Diakopoulos, N. (2020). Computational news discovery: Towards design considerations for editorial orientation algorithms in journalism. Digital Journalism, 8(7), 945–967.

Diakopoulos, N., Trielli, D., & Lee, G. (2021). Towards understanding and supporting journalistic practices using semi-automated news discovery tools. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 1–30.

Enda, J., Matsa, K. E., & Boyles, J. L. (2014). America’s shifting statehouse press: Can new players compensate for lost legacy reporters? Pew Research Center. https://www.pewresearch.org/journalism/2014/07/10/americas-shifting-statehouse-press/

Ernsthausen, J. (2014). What are the odds? New AJC app calculates chances Georgia bills will become law. The Atlanta Journal–Constitution. https://www.ajc.com/news/what-are-the-odds/ElDlnwnS4ATFOAaLiwUfYJ/

Friedman, B., Kahn Jr, P. H., Hagman, J., Severson, R. L., & Gill, B. (2006). The watcher and the watched: Social judgments about privacy in a public place. Human-Computer Interaction, 21(2), 235–272.

Graefe, A. (2016). Guide to automated journalism. Columbia Journalism Review. https://www.cjr.org/tow_center_reports/guide_to_automated_journalism.php

Graefe, A., & Bohlken, N. (2020). Automated journalism: A meta-analysis of readers’ perceptions of human-written in comparison to automated news. Media and Communication, 8(3), 50–59.

Hall, S. (2018). Can you tell if this was written by a robot? 7 challenges for AI in journalism. World Economic Forum. https://www.weforum.org/agenda/2018/01/can-you-tell-if-this-article-was-written-by-arobot-7-challenges-for-ai-in-journalism

Hamilton, J. T., & Turner, F. (2009, July). Accountability through algorithm: Developing the field of computational journalism. Report from the Center for Advanced Study in the Behavioral Sciences, Summer Workshop (pp. 27–41).

Hansen, M., Roca-Sales, M., Keegan, J. M., & King, G. (2017). Artificial intelligence: Practice and implications for journalism. Columbia Academic Commons. https://academiccommons.columbia.edu/doi/10.7916/D8X92PRD

Hassan, N., Sultana, A., Wu, Y., Zhang, G., Li, C., Yang, J., & Yu, C. (2014). Data in, fact out: automated monitoring of facts by FactWatcher. Proceedings of the VLDB Endowment, 7(13), 1557–1560.

Hawkins, R. P., Kreuter, M., Resnicow, K., Fishbein, M., & Dijkstra, A. (2008). Understanding tailoring in communicating about health. Health Education Research, 23(3), 454–466.

Hove, S. E., & Anda, B. (2005, September). Experiences from conducting semi-structured interviews in empirical software engineering research. In 11th IEEE International Software Metrics Symposium (METRICS’05) (pp. 10-pp). https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=1509301&casa_token=6NJbDgynszkAAAAA:5kZ8PSNFk2aGdMEuvKhecIdt21Fv9ZvLSGkf1ldkWmEAfvlznH3wKgwJseiHiJk_EhXLf3bbTaM&tag=1

Kim, D., & Lee, J. (2019). Designing an algorithm-driven text generation system for personalized and interactive news reading. International Journal of Human–Computer Interaction, 35(2), 109–122.

Klimashevskaia, A., Gadgil, R., Gerrity, T., Khosmood, F., Gütl, C., & Howe, P. (2021). Automatic news article generation from legislative proceedings: A Phenom-based approach. 9th International Conference on Statistical Language and Speech Processing (SLSP-21).

Kovach, B., & Rosenstiel, T. (2021). The elements of journalism: What newspeople should know and the public should expect (4th ed.). Crown.

Latner, M., Dekhtyar, A. M., Khosmood, F., Angelini, N., & Voorhees, A. (2017). Measuring legislative behavior: An exploration of Digitaldemocracy.org. California Journal of Politics and Policy, 9(3), 1–11.

Lewis, S. C., & Usher, N. (2013). Open source and journalism: Toward new frameworks for imagining news innovation. Media, Culture & Society, 35(5), 602–619.

Lin, B., & Lewis, S. (2021). The one thing journalistic AI might do for democracy. [Unpublished manuscript provisionally accepted at Digital Journalism].

Marconi, F., & Siegman, A. (2017). The future of augmented journalism: A guide for newsrooms in the age of smart machines. New York: AP Insights.

McClure Haughey, M., Muralikumar, M. D., Wood, C. A., & Starbird, K. (2020). On the misinformation beat: Understanding the work of investigative journalists reporting on problematic information online. Proceedings of the ACM on Human-Computer Interaction, 4(CSCW2), 1–22.

Napoli, P. M. (2014). Automated media: An institutional theory perspective on algorithmic media production and consumption. Communication Theory, 24(3), 340–360.

Nielsen, R. K., Newman, N., Fletcher, R., & Kalogeropoulos, A. (2019). Reuters institute digital news report 2019. Report of the Reuters Institute for the Study of Journalism.

O’Neill, D., & Harcup, T. (2016). What is news?: News values revisited (again). Journalism Studies, 18(7), 1470–1488.

Pavlik, J. (2000). The impact of technology on journalism. Journalism Studies, 1(2), 229–237.

Pew Research Center (2021, June 29). Newspaper fact sheet. https://www.pewresearch.org/journalism/fact-sheet/newspapers/

Posetti, J. (2018). Time to step away from the “Bright, Shiny Things”? Towards a sustainable model of journalism innovation in an era of perpetual change. Reuters Institute for the Study of Journalism, University of Oxford. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2018-11/Posetti_Towards_a_Sustainable_model_of_Journalism_FINAL.pdf

Ramo, M. (2021). Where are the robojournalists? Towards Data Science. https://towardsdatascience.com/where-are-the-robojournalists-b213e475ca64

Riedl, M. O. (2019). Human‐centered artificial intelligence and machine learning. Human Behavior and Emerging Technologies, 1(1), 33–36.

Shaw, A. (2017). As statehouse press corps dwindles, other reliable news sources needed. Better Government Association. https://www.bettergov.org/news/as-statehouse-press-corps-dwindles-other-reliable-news-sources-needed/

Shilton, K. (2018). Values and ethics in human-computer interaction. Foundations and Trends® in Human–Computer Interaction, 12(2).

Trielli, D., & Diakopoulos, N. (2019, May). Search as news curator: The role of Google in shaping attention to news information. In Proceedings of the 2019 CHI Conference on human factors in computing systems (pp. 1–15).

WashPostPR. (2020, February 3). The Washington Post unveils new data-driven strategy for 2020 coverage. The Washington Post. https://www.washingtonpost.com/pr/2020/02/03/washington-post-unveils-new-data-driven-strategy-2020-coverage/

Weiss, S. (2015). 140 characters of news. State Legislatures, 41(7), 42.

Williams, A. T. (2017). Measuring the journalism crisis: Developing new approaches that help the public connect to the issue. International Journal of Communication, 11, 4731–4743.

Patrick Howe is an Associate Professor of journalism at California Polytechnic State University. His research has explored native advertising, media management and algorithmic journalism. He has worked as an investigative reporter, editor and political correspondent for outlets including The Associated Press and the Arkansas Democrat Gazette. He is editorial adviser to Mustang News, Cal Poly’s integrated student media organization, and holds a master’s degree from the University of Missouri, Columbia.

Lindsay Grace is Knight Chair in Interactive Media and an Associate Professor at the University of Miami School of Communication. He is vice president for the Higher Education Video Game Alliance and the 2019 recipient of the Games for Change Vanguard Award. Lindsay’s book, Doing Things with Games, Social Impact through Design, is a well-received guide to game design. In 2020, he edited and authored Love and Electronic Affection: A Design Primer, on designing love and affection in games. He has authored or co-authored more than 50 papers, articles and book chapters on games since 2009.

Christine Robertson is Senior Advisor at the Institute for Advanced Technology and Public Policy. Prior, Christine held senior staff positions in California’s State Capitol including Chief of Staff to the Assembly Minority Caucus, Chief of Staff in the California State Senate, and Chief of Staff in the California State Assembly. She established and implemented policy, political, and communications priorities on behalf of the caucus. Christine earned Bachelor’s degrees in both Political Science and History at Cal Poly and a Master’s degree in Philosophy, Policy and Social Values from the London School of Economics and Political Science (LSE).

Foaad Khosmood is Forbes Professor of Computer Engineering and Professor of Computer Science at California Polytechnic State University. Dr. Khosmood’s research involves computational stylistics, natural language processing and artificial intelligence. He holds a Ph.D. in computer science from the University of California, Santa Cruz.