June 13, 2018 | Uncategorized

Machine learning & journalism: how computational methods are about to change the news industry

Mountains of garbage floating on a river and an urban landscape at the other edge. That’s how the creator of Deepnews.ai Frédéric Filloux described the Internet nowadays. He said that 100 million links flood the network every day and the journalism industry has room to “detect quality signals from such noise,” thus increasing “the economic value of great journalism.”

Filloux spoke about machine learning at the International Symposium on Online Journalism (ISOJ), held at the University of Texas, in Austin, in mid-April, 2018. He highlighted the relevance of finding ways to process online streams of information at scale by having a reliable scoring system to measure advertising, good recommendation engines, good levels of personalization and efficient curation/aggregation of products.

In fact, the history of data mining – and later advancements such as machine learning- is inseparable from digital-era advertising models like Amazon’s own-site recommendation engine based on previous purchases, or Google search’s Pagerank algorithm.

Filloux has applied a well-known method in the text mining field that combines deep learning techniques with human evaluation. He relied on state-of-the-art research on data mining to build a model with 20,000 parameters learned from 10 million articles. His model follows the big data approach by using a massive amount of data to train the algorithm that will ultimately find frequent patterns present in the millions of news pieces that build the data set. The novelty of Filloux’s work resides in applying techniques that are already quite widespread in data mining research in the specific field of journalism.

Filloux’s presentation took place during the first day of ISOJ as part of the panel “Trust: tools to improve the flow of accurate information,” which provided the audience with a broad view on how computational methods can be applied to improve journalistic practices – or just avoid traps. Joan Donovan, lead of research at Data & Society Research Institute, introduced the idea of source hacking, which is a tactic where groups coordinate to feed false information to journalists and experts. Regardless of the fact that good information is of the highest social interest at crises times, Donovan claims that wrong information usually spreads in less than 48 hours after an ongoing crisis is reported on breaking news.

Cameron Hickey, producer at PBS News Hour, also spoke about data mining approaches applied to journalism and presented the application NewsTracker. His main concern is identifying and following the activity of hundreds of fake news channels on the web. The ultimate goal is to algorithmically learn patterns to build a model to automatically identify such channels.

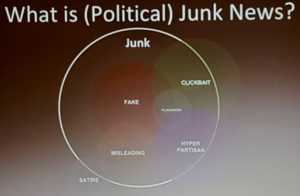

Hickey explained that he ought to use the term junk news, instead of fake news. He proposed looking at clickbait news, plagiarism, part of the hyper-partisan news, misleading information and the so-called fake news as part of the same huge bucket of junk news.

His main research hypothesis is that “if we can combine algorithmic and manual approaches to classify junk content, then in the future we will be able to reliably identify new sources of junk automatically.” He learned that many users who follow a single junk news page tend to have many other similar pages on their newsfeeds, too. Therefore, by identifying one follower of such a page, it is possible to reach many others and to find an extensive list of junk news sources through thesnowball effect.

Through this method, Hickey and his team created a junk score, to measure people’s inclination towards this kind of online content. The study has detected emerging domains at a rate of 80+ per month, in a total of 4,000 domains suspected of spreading junk news so far.

Information reliability is also the concern of the Vanderbilt University professor Lisa Fazio. She found out that peripheral information is often ignored when people read news online. Although traditional design gives room to secondary information on the peripheries of the printed area, such pieces of text and visual references also hold relevant information about the original source.

Fazio presented a study in which she ran three experiments with hundreds of participants. They read between 1 to 10 news articles and then were asked about what they remembered about what they had just read. Some participants were asked the same question one week after the first experiment.

Fazio presented evidence that logos are rarely noted or remembered and the number of logos shown on a piece didn’t seem to influence readers’ perceptions about its credibility. She also highlighted that participants had difficulties in remembering specific details of what they read even when they could remember its general idea.

This post is part of a series of articles written by exploratory students from the UT Austin | Portugal Digital Media program. These doctoral students, coming from both the University of Porto and the New University of Lisbon were sponsored by TIPI to spend 10 days at UT to further their research by meeting with various UT faculty. They carefully timed their visits to coincide with the ISOJ conference, considered an important part of their exploratory visit.

*Marcela Canavarro, PhD candidate at the Laboratory of Artificial Intelligence and Decision Support (LIAAD) at INESC TEC/U.Porto. Marcela is currently researching political mobilization on social networks in Brazil.

*Marcela Canavarro, PhD candidate at the Laboratory of Artificial Intelligence and Decision Support (LIAAD) at INESC TEC/U.Porto. Marcela is currently researching political mobilization on social networks in Brazil.